Install and configure prerequisites

yum install -y java-1.8.0-openjdk

fornodes -n 'yum install -y java-1.8.0-openjdk'
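`fornodes` appears to be a site-local helper script rather than a standard tool. If it is not available, a rough equivalent is a loop over `ssh`. The sketch below is hypothetical: the `run_on_nodes` name, the `NODES` host list and the `DRY_RUN` switch are assumptions, and it does not try to reproduce whatever the original `-n` option does.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for the site-local `fornodes` helper:
# run one command on every host listed in NODES over ssh.
# NODES and DRY_RUN are assumptions made for this sketch.
NODES=${NODES:-"hdw01 hdw02"}

run_on_nodes() {
    local cmd="$1" host rc=0
    for host in $NODES; do
        echo "== $host"
        if [ -n "$DRY_RUN" ]; then
            # Only print what would be executed; do not connect.
            echo "ssh root@$host $cmd"
        else
            # BatchMode makes ssh fail instead of prompting for a password.
            ssh -o BatchMode=yes "root@$host" "$cmd" || rc=1
        fi
    done
    return $rc
}

# Usage:
#   run_on_nodes 'yum install -y java-1.8.0-openjdk'
#   DRY_RUN=1 run_on_nodes 'java -version'   # preview only
```

The function returns non-zero if the command failed on any host, so it can be used in `&&` chains.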

[root@hdm01 Downloads]# cd /root/Downloads

[root@hdm01 Downloads]# wget https://archive.apache.org/dist/hadoop/common/hadoop-3.2.2/hadoop-3.2.2.tar.gz

##### Unpack to a directory of your choice (here /opt)

[root@hdm01 Downloads]# cd /opt

[root@hdm01 opt]# tar xvfz /root/Downloads/hadoop-3.2.2.tar.gz

##### Set HADOOP_HOME to point to that directory.

[root@hdm01 opt]# echo 'export HADOOP_HOME=/opt/hadoop-3.2.2' >>/etc/profile

##### Add $HADOOP_HOME/lib/native to LD_LIBRARY_PATH.

[root@hdm01 ~]# echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native' >>/etc/profile
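Appending with `>>` adds a duplicate line every time the step is re-run. A minimal sketch of an idempotent alternative, which appends a line only if an identical line is not already present; the `append_once` name is hypothetical. The new variables only take effect in shells that source `/etc/profile` afterwards.

```shell
# append_once FILE LINE: append LINE to FILE unless an identical line exists.
append_once() {
    local file="$1" line="$2"
    touch "$file" || return 1
    # -q quiet, -x match the whole line, -F treat LINE literally (no regex)
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (as root):
#   append_once /etc/profile 'export HADOOP_HOME=/opt/hadoop-3.2.2'
#   append_once /etc/profile 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native'
#   source /etc/profile      # load the variables into the current shell
#   echo "$HADOOP_HOME"
```

Single quotes keep `$LD_LIBRARY_PATH` and `$HADOOP_HOME` unexpanded in the file, so they are resolved each time the profile is sourced.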

Install Apache Spark

[root@hdm01 Downloads]# wget https://archive.apache.org/dist/spark/spark-3.0.2/spark-3.0.2-bin-hadoop3.2.tgz

[root@hdm01 Downloads]# cd /opt

[root@hdm01 opt]# tar xvfz /root/Downloads/spark-3.0.2-bin-hadoop3.2.tgz

[root@hdm01 opt]# ln -sf spark-3.0.2-bin-hadoop3.2 spark
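The unpack-and-link steps can be wrapped in one function so they run identically on every node. One detail matters when re-running them: if `/opt/spark` already exists as a symlink to a directory, plain `ln -sf` creates a new link *inside* that directory instead of replacing `spark`; `ln -n` avoids that. A sketch under these assumptions: the `install_dist` name is hypothetical, and the archive is assumed to unpack into a single top-level directory named after the file (as the Spark `-bin-hadoop3.2.tgz` tarball does).

```shell
# install_dist ARCHIVE DEST LINK: unpack ARCHIVE into DEST and point DEST/LINK at it.
install_dist() {
    local archive="$1" dest="$2" link="$3" name
    # Derive the expected top-level directory from the archive name.
    name=$(basename "$archive")
    name=${name%.tgz}
    name=${name%.tar.gz}
    mkdir -p "$dest" || return 1
    tar xzf "$archive" -C "$dest" || return 1
    if [ ! -d "$dest/$name" ]; then
        echo "expected $dest/$name after unpacking" >&2
        return 1
    fi
    # -n: if LINK already points to a directory, replace the link itself
    # rather than creating a new link inside that directory.
    ln -sfn "$name" "$dest/$link"
}

# Usage, on each node:
#   install_dist /root/Downloads/spark-3.0.2-bin-hadoop3.2.tgz /opt spark
```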

Repeat the above steps on all other nodes:

[root@hdm01 opt]# forcp /root/Downloads/spark-3.0.2-bin-hadoop3.2.tgz /root/Downloads

[root@hdm01 opt]# fornodes -n 'cd /opt && tar xvfz /root/Downloads/spark-3.0.2-bin-hadoop3.2.tgz'

[root@hdm01 opt]# fornodes -n 'cd /opt && ln -sf spark-3.0.2-bin-hadoop3.2 spark'

[root@hdm01 opt]# fornodes -n 'ls -l /opt'
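Like `fornodes`, `forcp` looks like a site-local helper. A hypothetical equivalent that copies one file to the same directory on every node with `scp`; the `copy_to_nodes` name, the `NODES` list and the `DRY_RUN` switch are assumptions for this sketch, not the original tool's interface.

```shell
# Hypothetical stand-in for the site-local `forcp` helper.
NODES=${NODES:-"hdw01 hdw02"}

# copy_to_nodes FILE DEST_DIR: copy FILE into DEST_DIR on every host in NODES.
copy_to_nodes() {
    local src="$1" dest_dir="$2" host rc=0
    [ -f "$src" ] || { echo "no such file: $src" >&2; return 1; }
    for host in $NODES; do
        if [ -n "$DRY_RUN" ]; then
            # Only print the command that would run.
            echo "scp $src root@$host:$dest_dir/"
        else
            scp -q "$src" "root@$host:$dest_dir/" || rc=1
        fi
    done
    return $rc
}

# Usage:
#   copy_to_nodes /root/Downloads/spark-3.0.2-bin-hadoop3.2.tgz /root/Downloads
```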

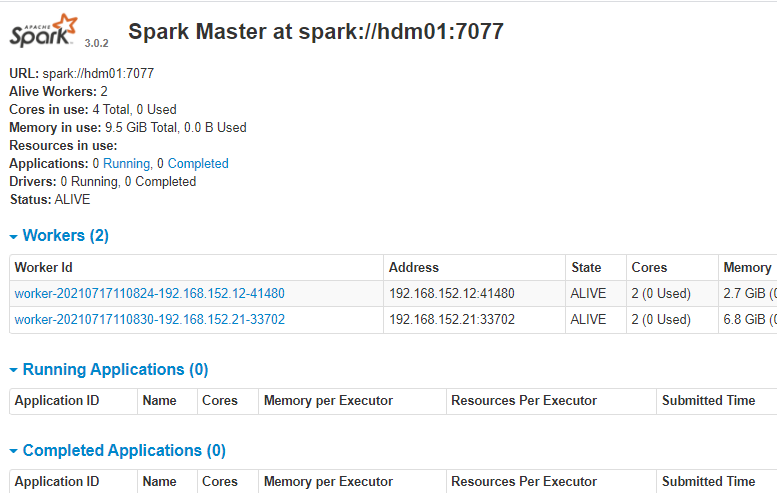

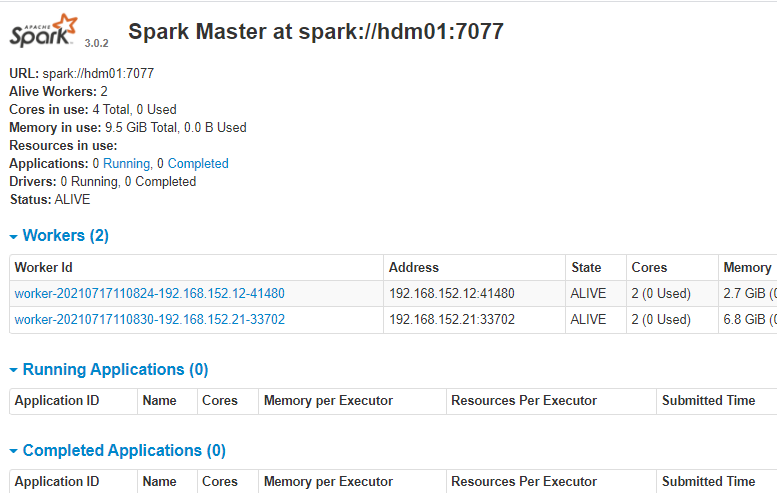

Start Apache Spark

Master Node (hdw02:8080)

[root@hdw02 opt]# cd /opt/spark

[root@hdw02 spark]# cat conf/spark-env.sh

#!/usr/bin/env bash

GPFDIST_PORT="12900, 12901, 12902"
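This `spark-env.sh` only sets `GPFDIST_PORT`, and `conf/` ships with just `spark-env.sh.template`, so the file has to be created. Spark's template also documents `SPARK_MASTER_HOST` and `SPARK_MASTER_WEBUI_PORT`; pinning the master to `hdw02` with them is an assumed addition, not part of the original setup. A sketch that writes the file (the `write_spark_env` name is hypothetical):

```shell
# write_spark_env CONF_DIR MASTER_HOST: create CONF_DIR/spark-env.sh.
# GPFDIST_PORT is copied from the setup above; the SPARK_MASTER_* lines
# are an assumption (bind the standalone master to MASTER_HOST, default UI port).
write_spark_env() {
    local conf_dir="$1" master_host="$2"
    mkdir -p "$conf_dir" || return 1
    cat > "$conf_dir/spark-env.sh" <<EOF
#!/usr/bin/env bash
GPFDIST_PORT="12900, 12901, 12902"
SPARK_MASTER_HOST=$master_host
SPARK_MASTER_WEBUI_PORT=8080
EOF
}

# Usage:
#   write_spark_env /opt/spark/conf hdw02
```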

[root@hdw02 spark]# sbin/start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-hdw02.out
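The workers need the exact master URL (for example `spark://hdw02:7077`). Rather than assuming it, it can be read back from the master's log file. This sketch assumes the standalone master logs a line of the form `Starting Spark master at spark://HOST:PORT`, which is what Spark 3.x prints, but check your own log; the `master_url_from_log` name is hypothetical.

```shell
# master_url_from_log LOGFILE: print the last spark:// URL the master announced.
# Returns non-zero if no such line is found.
master_url_from_log() {
    local url
    url=$(grep -o 'Starting Spark master at spark://[^ ]*' "$1" 2>/dev/null \
        | tail -n 1 \
        | sed 's/^Starting Spark master at //')
    [ -n "$url" ] && echo "$url"
}

# Usage on the master node:
#   master_url_from_log /opt/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-hdw02.out
# then pass the printed URL to sbin/start-slave.sh on each worker.
```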

Worker Nodes (repeat on every worker node: hdw01, hdm01)

[root@hdw01 conf]# pwd

/opt/spark/conf

[root@hdw01 conf]# cat spark-env.sh

#!/usr/bin/env bash

GPFDIST_PORT="12900, 12901, 12902"

[root@hdw01 conf]# cd /opt/spark

[root@hdw01 spark]# sbin/start-slave.sh spark://hdw02:7077

starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-sdw01.out
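`start-slave.sh` returns as soon as the daemon is launched, so it does not prove the worker joined the cluster. A sketch that waits for the registration message in the worker log; it assumes the worker logs a line containing `Successfully registered with master` (the standalone worker prints a message of this form, but verify against your log). The `wait_for_registration` name and the 30-second default are assumptions; the master web UI's worker list remains the authoritative check.

```shell
# wait_for_registration LOGFILE [TIMEOUT_SECONDS]
# Poll LOGFILE once per second until the registration line appears.
wait_for_registration() {
    local log="$1" timeout="${2:-30}" waited=0
    while [ "$waited" -lt "$timeout" ]; do
        if grep -q 'Successfully registered with master' "$log" 2>/dev/null; then
            # Show which master the worker registered with.
            grep -o 'Successfully registered with master [^ ]*' "$log" | tail -n 1
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "worker did not register within ${timeout}s; check $log" >&2
    return 1
}

# Usage on a worker:
#   wait_for_registration /opt/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-*.out 60
```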

History Server (hdw02:18080)

[root@hdw02 ~]# cd /opt/spark

[root@hdw02 spark]# mkdir /tmp/spark-events

[root@hdw02 spark]# chmod 777 /tmp/spark-events/

##### Add the following to /opt/spark/conf/spark-defaults.conf:

spark.eventLog.enabled true

spark.history.fs.logDirectory file:///tmp/spark-events

spark.eventLog.dir file:///tmp/spark-events
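`spark-defaults.conf` holds one whitespace-separated key/value pair per line, so adding entries by hand more than once can leave duplicate keys. A sketch of a helper that sets a key and drops any earlier line for it; `set_spark_conf` is a hypothetical name. Keep in mind that with a `file://` event log directory each driver writes to its own local `/tmp/spark-events`, so the History Server on this node only sees applications whose driver ran here, unless the directory is shared storage.

```shell
# set_spark_conf FILE KEY VALUE: set KEY to VALUE, replacing any earlier entry.
set_spark_conf() {
    local file="$1" key="$2" value="$3" tmp
    touch "$file" || return 1
    tmp=$(mktemp) || return 1
    # Keep every line whose first field is not KEY (comments are kept too),
    # then append the new entry.
    awk -v k="$key" '$1 != k' "$file" > "$tmp"
    printf '%s %s\n' "$key" "$value" >> "$tmp"
    # Rewrite in place so the original file keeps its ownership and mode.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage:
#   conf=/opt/spark/conf/spark-defaults.conf
#   set_spark_conf "$conf" spark.eventLog.enabled true
#   set_spark_conf "$conf" spark.history.fs.logDirectory file:///tmp/spark-events
#   set_spark_conf "$conf" spark.eventLog.dir file:///tmp/spark-events
```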

##### Start the History Server

[root@hdw02 spark]# sbin/start-history-server.sh

starting org.apache.spark.deploy.history.HistoryServer, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.history.HistoryServer-1-hdm01.out
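Once the History Server is running, Spark's monitoring REST API at `/api/v1/applications` lists the applications it has loaded from the event log directory (an empty JSON array until an application has run). A sketch assuming `curl` is installed and the default port 18080; the function names are hypothetical.

```shell
# history_apps_url HOST [PORT]: build the REST URL for the application list.
history_apps_url() {
    echo "http://$1:${2:-18080}/api/v1/applications"
}

# list_history_apps HOST [PORT]: fetch the JSON application list.
# -f makes curl fail on HTTP errors, -sS hides progress but keeps errors.
list_history_apps() {
    curl -fsS "$(history_apps_url "$@")"
}

# Usage:
#   list_history_apps hdw02
```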

Spark monitoring UI: http://hdm01:8080
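For scripted checks, the standalone master's web UI also serves its state as JSON under `/json/`. A sketch that counts the workers reported as alive; it assumes each worker entry carries a `"state"` field with value `"ALIVE"` (the master's own status uses a separate `"status"` key), so treat the count as a quick sanity check rather than a guarantee. The `count_alive_workers` name is hypothetical and `curl` is assumed to be installed.

```shell
# count_alive_workers: read the master's /json/ output on stdin and print how
# many "state" : "ALIVE" entries it contains (whitespace around ':' allowed).
count_alive_workers() {
    grep -o '"state"[[:space:]]*:[[:space:]]*"ALIVE"' | wc -l | tr -d ' '
}

# Usage:
#   curl -fsS http://hdm01:8080/json/ | count_alive_workers
```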