Administration Guide

Thank you so much for download Hyperscale Data Appliance software.

- Version: 2.0

- Author: Brady Chang

- Created: 8 April, 2022

- Update: 24 August, 2023

Hyperscale Data Appliance(HDA) is a self-contained data warehouse solution that integrates

all of the database software, servers, and switches neccessary to perform big data analytics.

The HDA is a turn-key, easy to install data warehouse solution that provides extreme query

and loading performance for analyzing large data sets. The HDA integrates Greenplum

database, data loading, and Apache Spark software with compute, storage and network

components; delivered racked and ready for immediate data loading and query execution

The Greenplum HDA is built from modules - Greenplum Database (GPDB) and Apache Spark

These modules consist of four servers with different software configurations.

Greenplum Database (GPDB) modules

GPDB modules are included in every HDA as the first module.

GPDB modules are available in a standard and high capacity configuration. The standard

configuration offers a mix of high performance and storage, while the capacity

configuration offers a large storage capacity while maintaining fast performance.

If you have any questions that are beyond the scope of this documentation, Please feel free to

email support@hyperscale.io

Operating System Parameters

The configuration changes must be propagated to all nodes.

/etc/sysctl.conf

kernel.shmmax = 500000000

kernel.shmmni = 4096

kernel.shmall = 4000000000

fs.file-max=65535

From Bash change /etc/security/limits.conf to...

root - nproc 65535

root - nofile 65535

gpadmin - nproc 65535

gpadmin - nofile 65535

grubby --update-kernel=ALL --args="elevator=deadline"

/data xfs rw,nodev,noatime,nobarrier,inode64 0 0

systemctl stop firewalld

systemctl disable firewalld

systemctl stop bluetooth.service

systemctl disable bluetooth.service

Only on CentOs7.9

[root@mdw ~]# yum install -y libcgroup

[root@mdw ~]# yum install -y libcgroup-tools

[root@mdw ~]# fornodes -n 'yum install -y libcgroup'

[root@mdw ~]# fornodes -n 'yum install -y libcgroup-tools'

[root@mdw ~]# systemctl enable cgconfig

[root@mdw ~]# systemctl start cgconfig

reboot

echo 'kernel.nmi_watchdog=0' >> /etc/sysctl.conf

sysctl -p

To permanently disable nmi_watchdog: modify /etc/default/grub

[root@mdw bin]# cat /etc/default/grub

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX_DEFAULT="rhgb quiet nmi_watchdog=0"

GRUB_DISABLE_RECOVERY="true"

systemctl stop NetworkManager

systemctl disable NetworkManager

systemctl list-unit-files | grep NetworkManager

Add the below parameter in /etc/sysconfig/network-scripts/ifcfg-ens34

NM_CONTROLLED="no"

Enabled and Restart Network service

systemctl enable network

systemctl restart network

Change ClientAliveInterval to 0 in /etc/ssh/sshd_cofnig

ClientAliveInterval 0

Restart ssh daemon: systemctl restart sshd

Configure Windows Client

Enable UbuntuStart PowerShell: Enter powershell in windows [search field ]

PS C:\Users\brady> wsl --install -d Ubuntu

Start Ubuntu

PS C:\Users\brady> ubuntu.exe

gpadmin@LAPTOP-928RP2RO:~

Add hostname resolution

c:\windows\System32\drivers\etc\hosts with notepad:

C:\Users\xxxxx>notepad c:\windows\System32\drivers\etc\hosts

C:\Users\xxxxx>type c:\windows\System32\drivers\etc\host

. . .

192.168.88.168 hdm01

192.168.88.101 mdw

192.168.88.11 hdw01 sdw01

192.168.88.12 hdw02 sdw02

192.168.88.13 hdw03 sdw03

167.71.100.113 hsio

143.198.106.44 hsiobe

# End of section

Configure WiFi

[root@hdw02 ~]# nmtui

*****select Activate a connection*****

┌─┤ NetworkManager TUI ├──┐

│ Please select an option │

│ Edit a connection │

│ Activate a connection │

│ Set system hostname │

│ Quit │

└───────────────────┘

Configure Central Logging on mdw

Syslog Server Setup

Uncomment the following to enable the syslog server to listen on the UDP and TCP protocol.

[root@mdw etc]# vi /etc/rsyslog.conf

# Provides UDP syslog reception

$ModLoad imudp

$UDPServerRun 514

# Provides TCP syslog reception

$ModLoad imtcp

$InputTCPServerRun 514

[root@mdw ~]# systemctl restart rsyslog

Syslog Client Setup

At the end of the file /etc/rsyslog.conf place following line at the end

*.* @@192.168.88.101:514

[root@hdw01 ~]# systemctl restart rsyslog

view logs from Greenplum external table command

(see Greenplum - External Tables section)

[gpadmin@mdw scripts]$ pwd

/opt/greenplum/scripts

[gpadmin@mdw scripts]$ ls -l

total 24

-rw-r--r--. 1 gpadmin gpadmin 221 Sep 24 12:11 select_var_log_messages.all.sql

-rw-r--r-- 1 gpadmin gpadmin 239 Oct 13 12:50 select_var_log_messages.hdm01.sql

-rw-r--r--. 1 gpadmin gpadmin 221 Sep 24 12:11 select_var_log_messages.hdw.sql

-rw-r--r-- 1 gpadmin gpadmin 239 Oct 13 15:00 select_var_log_messages.mdw.sql

-rw-r--r--. 1 gpadmin gpadmin 221 Sep 24 12:11 select_var_log_messages.sql

-rwxr-xr-x 1 gpadmin gpadmin 570 Oct 13 15:00 view_logs

[gpadmin@mdw scripts]$ cat view_logs

#!/bin/bash

usage()

{

cat << EOF

usage: bash ./ -n host_name

-m master

-w worker

-a all

-h Brings up this menu

EOF

}

case $1 in

-m)

shift

psql -d ecom -f select_var_log_messages.mdw.sql

;;

-s)

shift

psql -d ecom -f select_var_log_messages.hdm01.sql

;;

-w)

shift

psql -d ecom -f select_var_log_messages.hdw.sql

;;

-a)

shift

psql -d ecom -f select_var_log_messages.all.sql

;;

*) usage

exit 1

esac

HDA Log Maintenace

archived logs that occupy space

[root@mdw bin]# pwd

/root/bin

[root@mdw bin]# cat clean_logs

cat /dev/null > /usr/local/greenplum-cc/logs/agent.log

cat /dev/null > /usr/local/greenplum-cc/logs/webserver.log

rm -f /usr/local/greenplum-cc/logs/webserver.*.log

rm -f /var/log/vmware-vmsvc-root.*.log

rm -f /var/log/secure-20220*

cat /dev/null > /var/log/vmware-vmsvc-root.log

rm -f /home/gpadmin/gpAdminLogs/gpgetstatususingtransition.*

rm -f /home/gpadmin/gpAdminLogs/gpstate_*

rm -f /home/gpadmin/gpAdminLogs/gpstart_*

rm -f /home/gpadmin/gpAdminLogs/gpstop_*

rm -f /home/gpadmin/gpAdminLogs/gpinitsystem_2*

rm -f /home/gpadmin/gpAdminLogs/gpconfig_2*

rm -f /home/gpadmin/gpAdminLogs/gpperfmon_install_2*

rm -f /home/gpadmin/gpAdminLogs/backout_gpinitsystem_gpadmin_*

rm -f /home/gpadmin/gpAdminLogs/gppkg_2*

[root@hdm01 bin]#

Install DHCP server on mdw

(enable for pxeboot ONLY)

(M)aster (D)ata (W)orker (192.168.88.101)

[root@mdw ~]# rpm -aq |grep -i dhcp

dhcp-4.2.5-82.el7.centos.x86_64

dhcp-libs-4.2.5-82.el7.centos.x86_64

dhcp-common-4.2.5-82.el7.centos.x86_64

[root@mdw dhcp]# pwd

/etc/dhcp

[root@mdw ~]# cat /etc/dhcp/dhcpd.conf

#

# DHCP Server Configuration file.

# see /usr/share/doc/dhcp*/dhcpd.conf.example

# see dhcpd.conf(5) man page

ddns-update-style interim;

ignore client-updates;

authoritative;

allow booting;

allow bootp;

allow unknown-clients;

subnet 192.168.88.0 netmask 255.255.255.0 {

range 192.168.88.11 192.168.88.99;

#option routers 192.168.88.1;

option subnet-mask 255.255.255.0;

option domain-name-servers 8.8.8.8;

default-lease-time 36000;

max-lease-time 48000;

# IP of PXE Server

next-server 192.168.88.101;

filename "pxelinux.0";

host hdw01 {

option host-name "hdw01";

hardware ethernet 00:0c:29:cf:b2:17;

fixed-address 192.168.88.11;

}

host hdw02 {

option host-name "hdw02";

hardware ethernet 00:0c:29:12:8e:f5;

fixed-address 192.168.88.12;

}

}

Install Packages and create yum repo

Make CentOS bootable USB

Plug usb into hdm01

dd bs=4M if=/root/Downloads/CentOS-7-x86_64-DVD-2009.iso of=/dev/sdb \

status=progress oflag=sync

. . .

server {

listen 80;

listen [::]:80;

server_name _;

root /usr/share/nginx/html;

. . .

add the following to /etc/nginx/nginx.conf

location / {

autoindex on;

autoindex_exact_size on;

}

create local Centos7.9 Repo on hdw02

physical location: /opt/repo

Create /etc/yum.repos.d/centos7local.repo

[root@mdw yum.repos.d]# cat centos7local.repo

[CentOS7-Repo]

name=CentOS7 Server

baseurl=file:///opt/repo

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

create Centos7.9 Repo on mdw

physical location: /var/www/html/centos79

[root@mdw root]# pwd

/run/media/root

Create /etc/yum.repos.d/centos79.repo

[root@mdw yum.repos.d]# cat centos79.repo

[centos79]

name=centos79

baseurl=http://192.168.88.101:80/centos79

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

create HTTP Greenplum Repo on mdw

mkdir /var/www/html/greenplum

mv /tmp/greenplum-db-6.21.3.1-rhel7-x86_64.rpm \

/var/www/html/greenplum

[root@mdw html]# cd /etc/yum.repos.d/

[root@mdw yum.repos.d]# cat greenplum.repo

[greenplum]

name=greenplum

baseurl=http://192.168.88.101:80/greenplum

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

createrepo /var/www/html/greenplum/

[root@mdw yum.repos.d]# createrepo --update

[root@mdw greenplum]# yum list

[root@mdw greenplum]# yum makecache fast

[root@hdm01 yum.repos.d]# yum list all |grep -i green

greenplum-db-6-6.21.3-1.el7.x86_64

Master Node only

yum install -y gcc nginx screen pdsh pssh

source /etc/profile

wget http://www.scala-lang.org/files/archive/scala-2.12.0.tgz

tar xvf scala-2.12.0.tgz

mv scala-2.12.0 /usr/lib

ln -s /usr/lib/scala-2.12.0 /usr/lib/scala

echo "export PATH=$PATH:/usr/lib/scala/bin" >> /etc/profile

source /etc/profile

scala -version

Install rsyslog

[root@hdm01 yum.repos.d]# wget http://rpms.adiscon.com/v8-stable-daily/rsyslog-daily.repo

[root@hdm01 yum.repos.d]# yum install rsyslog

Install pdsh (centos 7)

[root@hsiobe yum.repos.d]# yum install epel-release

###Make sure it's enabled by examining /etc/yum.repo.d/epel.repo

[root@hsiobe yum.repos.d]# grep -i enable epel.repo

enabled=1

enabled=1

enabled=1

[root@hsiobe yum.repos.d]# yum install pdsh

create Centos 8.2 Repo on hdm01

[root@hdm01 yum.repos.d]# cat rhel8.repo

[BaseOS]

name=Red Hat Enterprise Linux 8 - BaseOS

metadata_expire=-1

gpgcheck=0

enabled=1

baseurl=file:///var/www/html/centos82

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[AppStream]

name=Red Hat Enterprise Linux 8 - AppStream

metadata_expire=-1

gpgcheck=0

enabled=1

baseurl=file:///var/www/html/centos82-stream/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[root@hdm01 yum.repos.d]# yum list |grep -i pdsh

pdsh.x86_64 2.34-5.el8 @BaseOS

pdsh-rcmd-ssh.x86_64 2.34-5.el8 @AppStream

Install pdsh (centos 8)

[root@hdm01 yum.repos.d]# yum install pdsh.x86_64

Install vsftpd (NOT USED)

[root@hdm01 base]# pwd

/var/www/html/repos/base

[root@hdm01 base]# wget http://mirror.centos.org/centos/7/os/x86_64/Packages/

vsftpd-3.0.2-28.el7.x86_64.rpm

ftpuser:gpadmin password: xxxxxxxx

install winscp

Download and Install https://winscp.net/download/WinSCP-5.19.6-Setup.exe

Connect hdm01 with winscp

Install Logstash (NOT USED)

[root@mdw ~]# rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

[root@mdw ~]# cat /etc/yum.repos.d/logstash.repo

[logstash-8.x]

name=Elastic repository for 8.x packages

baseurl=https://artifacts.elastic.co/packages/8.x/yum

gpgcheck=0

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

[root@mdw ~]# yum install logstash

[root@mdw ~]# systemctl enable logstash.service

Created symlink from /etc/systemd/system/multi-user.target.wants/logstash.service to

/etc/systemd/system/logstash.service.

[root@mdw ~]# systemctl start logstash.service

(END OF NOT USED)

upgrade to GNOME Desktop at your expense

yum groupinstall "GNOME Desktop

systemctl set-default graphical.target

reboot

HDA Cluster Commands

Disable host key checking

create .ssh/config file and add the Host, StrictHostKeyChecking

[gpadmin@hdm01 ~]$ cat .ssh/config

Host *

StrictHostKeyChecking no

[gpadmin@hdm01 ~]$ chmod 600 .ssh/config

Make gpadmin root privilege without password

[root@hdm01 etc]# ls /etc/sudoers

/etc/sudoers

## Same thing without a password

# %wheel ALL=(ALL) NOPASSWD: ALL

gpadmin ALL=(ALL) NOPASSWD: ALL

**********Try it out**********

[gpadmin@hdw01 ~]$ whoami

gpadmin

[gpadmin@hdw01 ~]$ pwd

/home/gpadmin

[gpadmin@hdw01 ~]$ sudo su -

Last login: Tue Apr 12 11:21:16 PDT 2022 on pts/2

[root@hdw01 ~]#

[root@hyperscale bin]# pwd

/root

fornodes - HDA CentOS 8.2 version

[root@hyperscale bin]# pwd

/root/bin

[gpadmin@hdm01 ~]$ cat /usr/bin/fornodes

usage="$(basename "$0") [-h] [-n][-a] command

where:

-h show this help text

-n execute comand on nodes(/etc/nodes)

-a execute command on all nodes(/etc/nodes.all)

command(s) enclose multiple commands in double quotes

example: fornodes -a \"echo 'hello'; echo 'hello again'\" "

echo "=========================================="

case $1 in

-n | --nodes )

shift

/usr/bin/pdsh -w ^/etc/nodes.worker $1

;;

-a | --all )

shift

/usr/bin/pdsh -w ^/etc/nodes.all $1

;;

* ) echo "$usage"

echo "=========================================="

echo " "

exit 1

esac

echo "=========================================="

echo " "

[root@hdm01 ~]# fornodes -n uptime

=================================================

hdw01: 15:16:01 up 22 days, 20:25, 0 users, load average: 0.00, 0.01, 0.05

hdw02: 15:16:31 up 23 days, 6:31, 2 users, load average: 0.33, 0.22, 0.23

==================================================

fornodes - single node instance

[root@hyperscale ~]# cat /etc/hosts

127.0.0.1 localhost

167.71.100.113 hyperscale.io lab.hyperscale.io hdm01 hdw01 hdw02

198.58.109.36 hsiobackup #linode

# The following lines are desirable for IPv6 capable hosts

::1 hyperscale.io hyperscale

[root@hdm01 bin]# cat /usr/bin/fornodes

for host in $(/root/bin/readhosts ); do echo $host; ssh $host $1; done

[root@hyperscale bin]# cat readhosts

input="/etc/nodes"

command="$1"

#echo $command

while IFS= read -r line

do

echo $line

done < "$input"

forcp

[root@hdm01 bin]# cat /usr/bin/forcp

set -e

ARGC=$#

display_usage() {

echo " ==================USAGE================="

echo " hostfile = /etc/nodes "

echo " $0 SOURCE DEST"

echo " ----------------------------------------"

echo " SOURCE:file/directory "

echo " DEST:file/directory"

}

usage="$(basename "$0") [-h][-r n] -- program to copy files

where:

-h show this help text

-r recursive "

while getopts 'r:h' option; do

case "$option" in

h | --help)

display_usage

echo ""

echo "$usage"

ex=1

exit 0

;;

r | --recurs)

OPTS='-r'

SRC=$2

DEST=$3

;;

esac

done

if [ -z $DEST ]; then

SRC=$1

DEST=$2

fi

if [[ "$ex" == 1 ]]; then

exit 0

fi

for i in `cat /etc/nodes`

do

echo " "

if [ -z $SRC ]; then

display_usage

exit

fi

if [ -d $SRC ]; then

ssh $i mkdir -p $SRC

fi

echo "#####rsync $OPTS $SRC $i:/$DEST"

rsync -av -e ssh --exclude='.*' $SRC $i:$DEST

#scp $OPTS $SRC $i:$DEST

done

Example: copy file /tmp /casper3 to /tmp on all nodes

[root@hdm01 bin]# forcp /tmp/casper3 /tmp

Download package with yum

[root@hdm01 ~]# [root@hdm01 ~]# yumdownloader pdsh*

Installation

[root@hdm01 ~]# yum install epel-release

[root@hdm01 ~]# yum install ansible

[root@hdm01 ~]# rpm -ql ansible

Modify /etc/ansible/ansible.cfg inventory path to reflect the hosts file

You can specify a different inventory file using the -i

[root@hdm01 ansible]# ansible -i /etc/ansible/hosts all -m ping

##### Using the default config file in /etc/ansible/ansible.cfg #####

[root@hdm01 ansible]# ansible all -m ping

[root@hdm01 ~]# mkdir ansible

[root@hdm01 ~]# cd ansible/

[root@hdm01 ansible]# cat inventory

hdm01

mdw

hdw01

hdw02

Examples:

[root@hdm01 ~]# ansible -m shell -a 'free -m' all

[root@hdm01 ~]# ansible -m shell -a 'rpm -aq |grep -i ansible' all

[root@hdm01 ~]# ansible -m shell -a 'ls /tmp' all

[root@hdm01 ~]# ansible -i /etc/ansible/hosts -m copy -a "src=/tmp/up2 dest=/tmp" all

Backup Directories

[root@hdm01 opt]# pwd

/opt

rsync -avh flask/ root@hyperscale.io:/opt/backup/hdm01/opt/flask

Install shell in a box

[root@mdw ~]# wget https://download-ib01.fedoraproject.org/pub/epel/7/x86_64/Packages/s/shellinabox-2.20-5.el7.x86_64.rpm

[root@mdw ~]# yum install -y shellinabox-2.20-5.el7.x86_64.rpm

[root@mdw ~]# systemctl enable shellinaboxd

[root@mdw ~]# systemctl start shellinaboxd

Open the browser and navigate to https://host_ip:4200

Install, Configure, and Deploy Flask

[root@hdm01 ~]# cd ~/Downloads/Flask-Migragte-3.0.1

[root@hdm01 Flask-Migrate-3.0.1]# python3 setup.py install

[root@hdm01 Flask-Migrate-3.0.1]# cd ../

[root@hdm01 Downloads]# wget Flask-SQLAlchemy-2.5.1.tar.gz

[root@hdm01 Downloads]# tar xvfz Flask-SQLAlchemy-2.5.1.tar.gz

[root@hdm01 Downloads]# cd Flask-SQLAlchemy-2.5.1/

[root@hdm01 Flask-SQLAlchemy-2.5.1]# python3 setup.py install

[root@hdm01 Flask-SQLAlchemy-2.5.1]# cd ../

[root@hdm01 Downloads]# wget Flask-Mail

[root@hdm01 Downloads]# tar xvfz Flask-Mail-0.9.1.tar.gz

[root@hdm01 Downloads]# cd flask-mail

[root@hdm01 flask-mail]# python -V

Python 3.6.8

[root@hdm01 flask-mail]# python setup.py install

Complete software stack displayed by pip3 list

[root@hdm01 Downloads]# pip3 list |grep -i flask

Flask (2.0.1)

Flask-Cache (0.13.1)

Flask-HTTPAuth (4.5.0)

Flask-Mail (0.9.1)

Flask-Migrate (3.0.1)

Flask-RESTful (0.3.10)

Flask-Session (0.4.0)

Flask-SQLAlchemy (2.5.1)

Flask-SSLify (0.1.5)

Flask-WTF (1.0.0)

Deploy Hyper Lab

[root@mdw hyperlab]# pwd

/opt/flask/hyperlab

[root@mdw hyperlab]# nohup /opt/flask/hyperlab/reloadme

Deploy hsio web

[root@mdw web]# pwd

/opt/flask/web

[root@hyperscale web]# cat app.py

from flask import Flask, render_template

import subprocess

app = Flask(__name__)

@app.route("/")

def main():

return render_template('index.html')

@app.route("/about/")

def about():

return render_template('hyperlab.html')

@app.route("/name/

def get_book_name(name):

return "name : {}".format(name)

@app.route("/details")

def get_book_details():

author=request.args.get('author')

published=request.args.get('published')

return "Author : {}, Published: {}".format(author,published)

if __name__ == '__main__':

website_url = 'lab.hyperscale.io:5000'

appconfig['SERVER_NAME'] = website_url

app.run(debug=True)

[root@mdw web]# export FLASK_APP=app.py

[root@mdw web]# nohup python3 -m flask run -h 192.168.152.111 &

TBD

Install SQLAlchemy 1.4.40

Download SQLAlchemy-1.4.40.tar.gz from pypi.org

[root@hdm01 Downloads]# tar xvfz SQLAlchemy-1.4.40.tar.gz

[root@hdm01 Downloads]# cd SQLAlchemy-1.4.40/

[root@hdm01 SQLAlchemy-1.4.40]# cat /usr/bin/p3

cd /usr/bin; ln -sf /usr//bin/python3.6 python

cd /usr/bin; ln -sf /usr/bin/pip3.6 pip3

[root@hdm01 SQLAlchemy-1.4.40]# /usr/bin/p3

[root@hdm01 SQLAlchemy-1.4.40]# python setup.py install

#Test the installation

[root@hdm01 SQLAlchemy-1.4.40]# python

Python 3.6.8 (default, Sep 25 2021, 23:57:19)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import sqlalchemy

>>> sqlalchemy.__version__

'1.4.34'

>>>

Install PHP -

make sure you are using python 2.7.5

[root@hdm01] yum install epel-release yum-utils

[root@hdm01] yum install http://rpms.remirepo.net/enterprise/remi-release-7.rpm

[root@hdm01]# yum-config-manager --enable remi-php73

[root@hdm01]# yum install php php-common php-opcache php-mcrypt php-cli \

[root@hdm01]# php-gd php-curl php-mysqlnd

[root@hdm01]# yum install php-pgsql

[root@hdm01]# php -m |grep pdo_pgsql

[root@hdm01 ecom]# php -r "copy('https://getcomposer.org/installer', 'composer-setup.php');"

[root@hdm01 ecom]# php composer-setup.php --install-dir=/usr/local/bin --filename=composer

#php interactive shell to test Greenplum connectivity

[root@hdm01]# php -a

Execute PHP script

[root@hdm01 ecom]# pwd

/opt/flask/ecom

[root@hdm01 ecom]# cat customer.php

<?php

$conn = pg_connect("host=192.168.152.111 dbname=ecom user=gpadmin

password=xxxxxxxx");

$query = "select * from customer";

$rs = pg_query($conn, $query) or die ("cannot execute query: $query\n");

print "customer id customer name customer type\n";

while ($row = pg_fetch_row($rs)){

echo "$row[0] $row[1] $row[2] \n";

}

?>

[root@hdm01 ecom]# php -f customer.php

customer id customer name customer type

1 Hyperscale IO 2

2 Casper Inc 2

3 Max 1

Ansible -

Installation on RHEL 8.2

Download Ansible 2.8.1 from https://releases.ansible.com/ansible

PreReq: jinja2 PyYAML cryptography

[root@hdm01 Downloads]# tar xvfz ansible-2.8.1.tar.gz

[root@hdm01 Downloads]# cd ansible-2.8.1/

[root@hdm01 ansible-2.8.1]# python3 setup.py install

Disable Warnings in /etc/ansible/ansible.cfg

[root@hdm01 ansible]# cat /etc/ansible/ansible.cfg

[defaults]

DEVEL_WARNING=False

ANSIBLE_COMMAND_WARNINGS=false

ACTION_WARNING=False

ANSIBLE_PYTHON_INTERPRETER=auto_silent

Install JDK -

Oracle JDK 1.7(all nodes)

[root@hdm01 Downloads]# wget https://download.oracle.com/java/17/latest/jdk-17_linux-x64_bin.rpmInstall and Configure pxeboot

Install and configure pxe-boot -

configure Apache Httpd on mdw((H)yperscale (D)ata (M)aster 2

[root@mdw~]# vi /etc/httpd/conf/httpd.conf

DocumentRoot "/var/www/html"

<Directory "/var/www">

Options Indexes FollowSymLinks

AllowOverride None

Require all granted

<Directory>

Install required packages on mdw((H)yperscale (D)ata (M)aster 2

[root@mdw~]# yum install -y dhcp tftp tftp-server syslinux vsftpd xinetd

[root@mdw~]# mkdir /tftpboot

Copy required network boot files to ‘/tftpboot’

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/pxelinux.0 /tftpboot/

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/menu.c32 /tftpboot

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/memdisk /tftpboot

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/mboot.c32 /tftpboot

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/chain.c32 /tftpboot

[root@mdw xinetd.d]# mkdir /tftpboot/pxelinux.cfg

[root@mdw xinetd.d]# mkdir /tftpboot/networkboot

#test tftpboot connection from hdm01

[root@hdm01 ~]# tftp mdw

tftp> verbose

Verbose mode on.

tftp> get test.txt

getting from mdw:test.txt to test.txt [netascii]

Received 5 bytes in 0.1 seconds [342 bit/s]

#ctrol-d to exit tftp prompt

tftp>

cat /tftpboot/pxelinux.cfg/default

default menu.c32

prompt 0

timeout 30

MENU TITLE Hyperscale.io transformer- install Menu

LABEL hdw

MENU LABEL hdw (Hyperscale work node)

KERNEL /networkboot/vmlinuz

APPEND initrd=/networkboot/initrd.img inst.repo=http://192.168.88.101/centos79 \

ks=http://192.168.88.101/centos79/hdw.cfg hostname=hdw03 text

add node_macs to mdw:/data/centos79

[root@mdw centos79]# cat node_macs

00:0c:29:cf:b2:17 hdw01

00:0c:29:12:8e:f5 hdw02

00:0c:29:40:71:66 hdw03

00:0e:c6:68:99:ca mdw

00:0c:29:d4:64:f2 hdm01

add hdw_skel.tgz to mdw:/data/centos79 Hyperscale Data Node

Greenplum

Greenplum documentation

Install Greenplum Database

[root@mdw Download]# yum install -y /root/Download/greenplum-db-6.21.3.rhel7-x86_64.rpm

[root@mdw Download]# forcp /root/Downloads/greenplum-db-6.21.3.rhel7-x86_64.rpm /tmp

[root@mdw Download]# fornodes -n 'yum install -y /tmp/greenplum-db-6.21.3.rhel7.x86_64.rpm'

Add the following to gpadmin's .bashrc

------------------------------------------------------------

source /opt/greenplum/greenplum-cc/gpcc_path.sh

export LD_PRELOAD=/lib64/libz.so.1 ps

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/data/master/gpseg-1

source /usr/local/greenplum-cc/gpcc_path.sh

------------------------------------------------------------

[gpadmin@mdw ~] cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config \

/home/gpadmin/gpconfigs/gpinitsystem_config

# Modify the following parameters in gpinitsystem_config:

declare -a DATA_DIRECTORY=(/data/primary)

MACHINE_LIST_FILE=/home/gpadmin/gpconfigs/hostfile

[root@mdw ~]# mkdir /data/primary

[root@mdw ~]# mkdir /data/master

[root@mdw ~]# fornodes -n 'mkdir -p /data/primary'

[root@mdw ~]# fornodes -n 'chown -R gpadmin:gpadmin /data/'

[root@mdw ~]# chown -R gpadmin:gpadmin /data

[root@mdw ~]# su - gpadmin

[gpadmin@mdw~]# gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile

Single Node GPDB

[gpadmin@hsiobe ~]$cat /etc/hosts143.198.106.44 hsiobe # hsio backend database and middleware

143.198.106.44 hdm01

143.198.106.44 hdw01

143.198.106.44 hdw02

#Enable passwordless ssh by adding ~/.ssh/id_dsa.pub to ~/.ssh/authorized_kyes

[gpadmin@hsiobe ~]$ fornodes -a uptime

Sun Jun 12 20:17:34 UTC 2022

===========================================

hdm01: 20:17:34 up 15:11, 3 users, load average: 0.16, 0.05, 0.06

hdw01: 20:17:34 up 15:11, 3 users, load average: 0.16, 0.05, 0.06

hdw02: 20:17:34 up 15:11, 3 users, load average: 0.16, 0.05, 0.06

===========================================

[gpadmin@mdw ~]$ pwd

/home/gpadmin

[gpadmin@mdw ~]$ cat gpconfigs/hostfile

hdw01

hdw02

[gpadmin@mdw ~]$ cat gpconfigs/gpinitsystem_config

. . .

declare -a DATA_DIRECTORY=(/data/primary1 /data/primary2)

#### OS-configured hostname or IP address of the master host.

MASTER_HOSTNAME=mdw

#### File system location where the master data directory

#### will be created.

MASTER_DIRECTORY=/data/master

. . .

#initialize instance

[gpadmin@mdw ~]$gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile

#add the folowing to pg_hba and restart

[gpadmin@mdw ~]$ cat /data/master/gpseg-1/pg_hba.conf

local all hsio md5

host all hsio 192.168.88.0/24 md5

Enable mirroring

[gpadmin@hdm01~]# gpaddmirrorsEnter mirror segment data directory location 1 of 1 >

/data/mirror

. . .

[INFO]:-Data synchronization will continue in the background.

Use gpstate -s to check the resynchronization progress.

Install SQLAlchemy 1.4.31

Download SQLAlchemy-1.4.40.tar.gz

[root@hdm01 Downloads]# tar xvfz SQLAlchemy-1.4.31.tar.gz

[root@hdm01 Downloads]# cd SQLAlchemy-1.4.31/

[root@hdm01 SQLAlchemy-1.4.31]# python setup.py install

#Test the installation

[root@hdm01 SQLAlchemy-1.4.31]# python

Python 3.9.7 (default, Sep 25 2021, 23:57:19)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux

Type "help", "copyright", "credits" or "license" for more information.

Install PHP -

make sure you are using python 2.7.5

[root@hdm01] yum install epel-release yum-utils

[root@hdm01] yum install http://rpms.remirepo.net/enterprise/remi-release-7.rpm

[root@hdm01]# yum-config-manager --enable remi-php73

[root@hdm01]# yum install php php-common php-opcache php-mcrypt php-cli \

[root@hdm01]# php-gd php-curl php-mysqlnd

[root@hdm01]# yum install php-pgsql

[root@hdm01]# php -m |grep pdo_pgsql

[root@hdm01 ecom]# php -r "copy('https://getcomposer.org/installer', 'composer-setup.php');"

[root@hdm01 ecom]# php composer-setup.php --install-dir=/usr/local/bin --filename=composer

#php interactive shell to test Greenplum connectivity

[root@hdm01]# php -a

Execute PHP script

[root@hdm01 ecom]# pwd

/opt/flask/ecom

[root@hdm01 ecom]# cat customer.php

<?php

$conn = pg_connect("host=192.168.152.111 dbname=ecom user=gpadmin

password=xxxxxxxx");

$query = "select * from customer";

$rs = pg_query($conn, $query) or die ("cannot execute query: $query\n");

print "customer id customer name customer type\n";

while ($row = pg_fetch_row($rs)){

echo "$row[0] $row[1] $row[2] \n";

}

?>

[root@hdm01 ecom]# php -f customer.php

customer id customer name customer type

1 Hyperscale IO 2

2 Casper Inc 2

3 Max 1

Install and Configure pxeboot

Install and configure pxe-boot -

Install required packages

[root@hdm01 ~]# yum install -y dhcp tftp tftp-server syslinux vsftpd xinetd

Copy required network boot files in ‘/var/lib/tftpboot/’

[root@hdm01 xinetd.d]# cp -v /usr/share/syslinux/pxelinux.0 /var/lib/tftpboot/

[root@hdm01 xinetd.d]# cp -v /usr/share/syslinux/menu.c32 /var/lib/tftpboot

[root@hdm01 xinetd.d]# cp -v /usr/share/syslinux/memdisk /var/lib/tftpboot

[root@hdm01 xinetd.d]# cp -v /usr/share/syslinux/mboot.c32 /var/lib/tftpboot

[root@hdm01 xinetd.d]# cp -v /usr/share/syslinux/chain.c32 /var/lib/tftpboot

[root@hdm01 xinetd.d]# mkdir /var/lib/tftpboot/pxelinux.cfg

[root@hdm01 xinetd.d]# mkdir /var/lib/tftpboot/networkboot

Greenplum

Greenplum documentation

Install Greenplum Database

Download gpdb from https://github.com/greenplum-db/gpdb/releases/tag/6.18.2-rhel7-x86_64.rpm

[root@hdm01 greenplum]# yum install -y \

/tmp/open-source-greenplum-db-6.18.2-rhel7-x86_64.rpm

[root@hdm01 greenplum]# forcp /tmp/open-source-greenplum-db-6.18.2-rhel7-x86_64.rpm /tmp

[root@hdm01 greenplum]# fornodes -n 'yum install -y open-source-greenplum-db-6.18.2-rhel7-x86_64.rpm'

Add the following to gpadmin's .bashrc

------------------------------------------------------------

source /opt/greenplum/greenplum-cc/gpcc_path.sh

export LD_PRELOAD=/lib64/libz.so.1 ps

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/data/master/gpseg-1

source /usr/local/greenplum-cc/gpcc_path.sh

------------------------------------------------------------

[gpadmin@hdm01 ~] cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config \

/home/gpadmin/gpconfigs/gpinitsystem_config

# Modify the following parameters in gpinitsystem_config:

declare -a DATA_DIRECTORY=(/data/primary)

MACHINE_LIST_FILE=/home/gpadmin/gpconfigs/hostfile

[root@hdm01 ~]# mkdir /data/primary

[root@hdm01 ~]# mkdir /data/master

[root@hdm01 ~]# fornodes -n 'mkdir -p /data/primary'

[root@hdm01 ~]# fornodes -n 'chown -R gpadmin:gpadmin /data/'

[root@hdm01 ~]# chown -R gpadmin:gpadmin /data

[root@hdm01 ~]# su - gpadmin

[gpadmin@hdm01~]# gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile

Enable mirroring

[gpadmin@hdm01~]# gpaddmirrorsEnter mirror segment data directory location 1 of 1 >

/data/mirror

. . .

[INFO]:-Data synchronization will continue in the background.

Use gpstate -s to check the resynchronization progress.

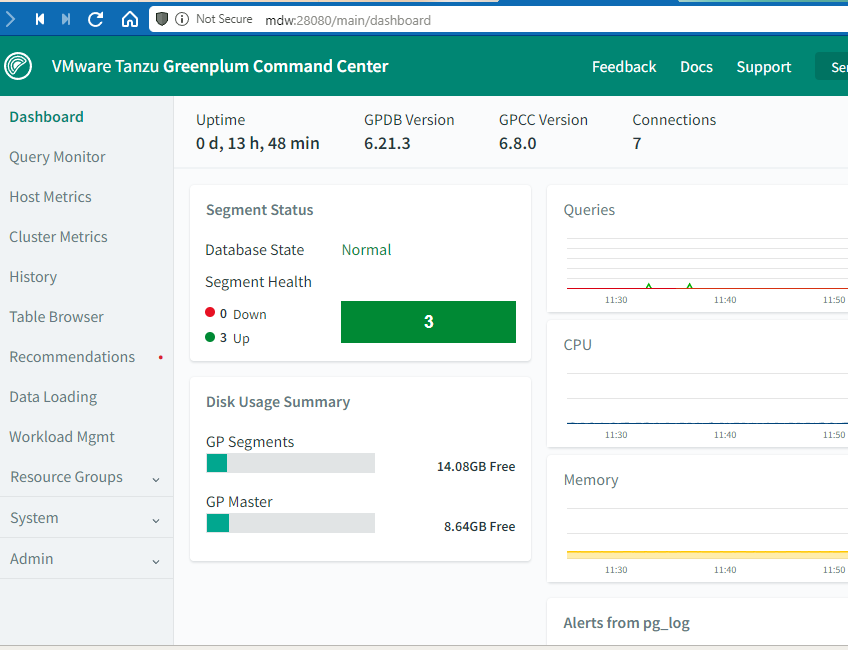

Start Greenplum Command Center

port 28080

[gpadmin@mdw ~]$ cat .pgpass

*:5432:gpperfmon:gpmon:xxxxxxxx

Option 1:

[gpadmin@mdw ~]$ /bin/bash -c 'source /usr/local/greenplum-cc/gpcc_path.sh \

&& /usr/local/greenplum-cc/bin/gpcc start'

Option 2:

[gpadmin@mdw ~]$ source /usr/local/greenplum-cc/gpcc_path.sh

[gpadmin@mdw ~]$ gpcc start

Starting the gpcc agents and webserver...

Agent successfully started on 3/3 hosts

View Greenplum Command Center at http://mdw:28080

Create Hyperscale functions

###### PL/Python #####

psql -d ecom -U gpadmin -c 'CREATE EXTENSION plpythonu;'

###### create pymax as gpadmin#####

CREATE FUNCTION pymax (a integer, b integer)

RETURNS integer

AS $$

if (a is None) or (b is None):

return None

if a > b:

return a

return b

$$ LANGUAGE plpythonu;

grant usage on plpythonu to hsio;

###### from sql editor: as hsio

SELECT ( pymax(123, 43));

pymax|

-------+

123 |

###### customer types from cust_type table #####

-- tid|cust_type_id|cust_type_name

-- ---+------------+---------------

-- 1 | 1 | external

-- 2 | 2 | internal

-- 3 | 3 | prospect

-- 4 | 4 | OEM

-- 5 | 5 | software vendor

###### add customer record function#####

CREATE OR REPLACE FUNCTION public.add_customer(name text, custTypeId integer)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

customer_row customer%ROWTYPE;

BEGIN

insert into customer (cust_name,cust_type_id) values(name,custTypeId);

RETURN 0 ;

END;

$function$

Example: add customer: add customer Casper with type_id of 2(internal)

select add_customer('Casper',2)

======================================

###### delete customer record function#####

CREATE OR REPLACE FUNCTION public.del_customer(customerId integer)

RETURNS integer

LANGUAGE plpgsql

AS $function$

declare

custid integer;

customer_row customer%ROWTYPE;

BEGIN

update customer set customer_status =0 where cust_id =customerId;

RETURN 0 ;

END;

$function$

Example: delete customer: with cust_id of 2222(internal)

select del_customer(2222)

======================================

###### add customer attribute (cust_attr) record function #####

CREATE OR REPLACE FUNCTION public.add_cust_attr(cust_id_var integer,attrid integer,

attribute_value_var text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

cust_attr_row cust_attr%ROWTYPE;

BEGIN

insert into cust_attr(cust_id, attr_id, attribute_value) values(cust_id_var, attrid,

attribute_value_var);

RETURN 0 ;

END;

$function$

Example: add customer attribute

cust_id_var integer, attrid integer, attribute_value_var

1=Hyperscale IO 3=office phone 408-781-6849

SELECT add_cust_attr(1,3,'408-781-6849')

======================================

###### add orders record function #####

CREATE OR REPLACE FUNCTION public.add_order(custId integer, orderDesc text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

orders_row orders%ROWTYPE;

BEGIN

insert into orders (cust_id,order_desc) values(custId,orderDesc);

RETURN 0 ;

END;

$function$

Example: add order

custId integer, orderDesc text

SELECT add_order(2,'Casper''s first order')

======================================

###### add order_detail record function #####

CREATE OR REPLACE FUNCTION public.add_order_detail(orderId integer, prodId integer,

orderDetailDesc text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

order_detail order_detail%ROWTYPE;

BEGIN

insert into order_detail (order_id,prod_id,order_detail_desc) values

(orderId,prodId,orderDetailDesc);

RETURN 0 ;

END;

$function$

Example: insert order_detail:

order_id=1, prod_id=101, order_detail_desc

select add_order_detail(1,101,'testing testing testing...')

======================================

###### remove order_detail record function #####

CREATE OR REPLACE FUNCTION public.rm_order_detail(orderDetailId integer)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

order_detail order_detail%ROWTYPE;

BEGIN

delete from order_detail where order_detail_id =orderDetailId;

RETURN 0 ;

END;

$function$

Example: insert order_detail:

order_id=1, prod_id=101, order_detail_desc

select add_order_detail(1,101,'testing testing testing...')

======================================

######add product function#######

CREATE OR REPLACE FUNCTION public.add_product(prodName text,

prodDesc text,prodNote text,prodImage text,

prodInStock integer,prodCategory integer, prodPrice NUMERIC(10,2))

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

product_row products%ROWTYPE;

BEGIN

insert into products (prod_name,prod_description,prod_note,

prod_image,prod_in_stock,prod_categoryid,prod_price) values

(prodName, prodDesc,prodNote,prodImage,prodInStock,prodCategory, prodPrice);

RETURN 0 ;

END;

$function$

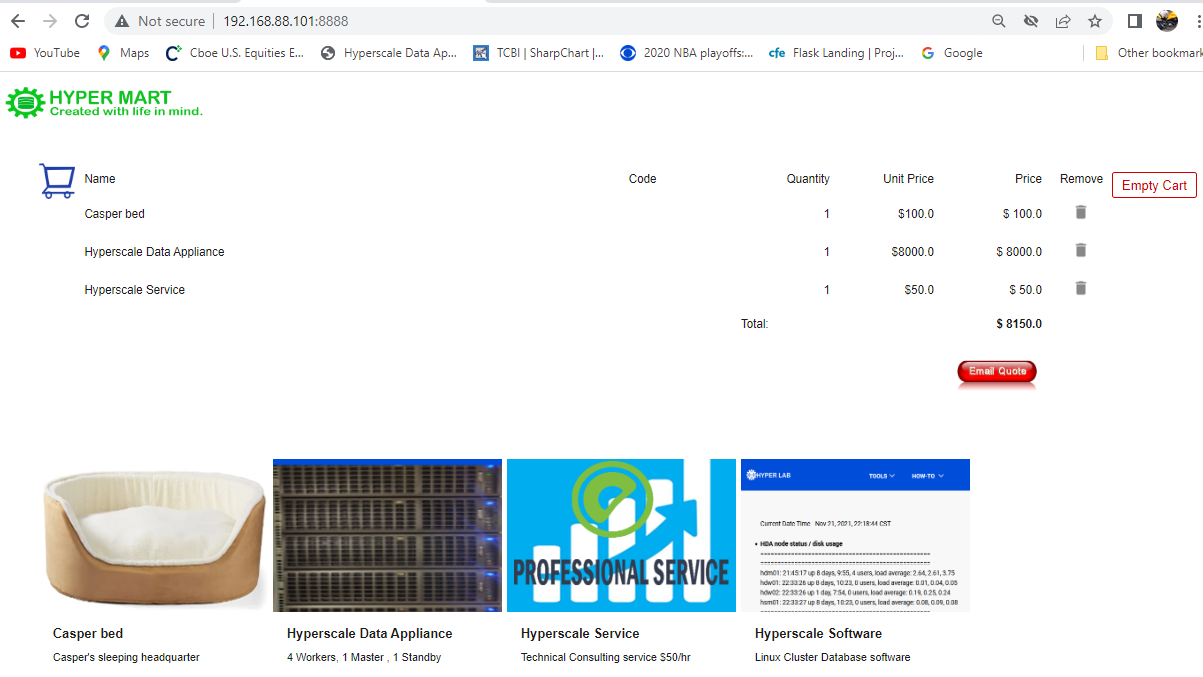

Example: add_product:

select add_product('Casper bed','Casper''s sleeping headquarter','casper note',

'casper.png',1,1,100.00)

======================================

###### add product attribute function #####

CREATE OR REPLACE FUNCTION public.add_product_attr(attrid integer, prodId integer,

attrName text, attrValue text) RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

prod_attr prod_attr%ROWTYPE;

BEGIN

insert into prod_attr(attr_id,prod_id,attr_name,attr_value)

values(attrid,prodId,attrName,attrValue);

RETURN 0 ;

END;

$function$

Example: add_product_attr(attr_id,prod_id,attr_name,attr_value)

select add_product_attr(8,1,'retail price','100.00')

======================================

###### add service function #####

CREATE OR REPLACE FUNCTION public.add_service(orderId integer, prodId integer,

serviceDesc text, serviceTech text, serviceStatus integer

RETURNS intege

LANGUAGE plpgsq

AS $function

DECLAR

service_row service%ROWTYPE

BEGI

insert into service (order_id,prod_id,service_desc,service_tech,status)

values(orderId, prodId,serviceDesc,serviceTech,serviceStatus)

RETURN 0

END

$function

Example: add_service(orderId,prodId,serviceDesc,serviceTech,serviceStatus)

select add_service(1,1,'make bed more comfortable','Brady Chang',1)

======================================

JDBC

See section webtemplate

[root@hdm01 templates]# pip3 list

Package Version

configparser 5.0.2

greenlet 1.1.1

pip 21.2.4

psycopg2-binary 2.9.1

setuptools 57.4.0

SQLAlchemy 1.4.34

sqlalchemy-greenplum 0.1.0

Flask-SQLAlchemy 2.5.1

Flask-Babel 2.5.1

Flask-migrate 2.5.1

Flask 2.5.1

alembic 1.7.3

Babel 2.9.1

wheel 0.37.0

import sqlalchemy as db

from sqlalchemy import create_engine

from sqlalchemy.orm import sessionmaker

def main():

engine = db.create_engine('greenplum://hsio:xxxxxxxx@192.168.88.101/ecom')

con = engine.connect()

metadata=db.MetaData()

users = db.Table('users',metadata, autoload=True,autoload_with=engine)

query = db.select([users])

rsProxy= con.execute(query)

rs=list(rsProxy.fetchall())

for row in rs:

print(row)

rsProxy.close()

if __name__ == '__main__':

main()

DBeaver

Add gpadmin to /data/master/gpseg-1/pg_hba.conf

# TYPE DATABASE USER ADDRESS METHOD

host all gpadmin 192.168.88.102/32 trust

Download Dbeaver from https://dbeaver.io/files/dbeaver-ce-latest-x86_64-setup.exe

Hyperscale Business Model

CREATE SEQUENCE cust_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

CREATE SEQUENCE cust_type_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

CREATE SEQUENCE public.attr_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

CREATE SEQUENCE activity_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

CREATE TABLE public."attribute" (

attr_id int4 NOT NULL DEFAULT nextval('attr_id_serial'::regclass),

attr_value varchar(30) NULL,

CONSTRAINT attribute_pkey PRIMARY KEY (attr_id)

);

-- public.cust_type definition

-- Drop table

DROP TABLE IF exists public.cust_type;

CREATE TABLE cust_type

(

tid int PRIMARY KEY DEFAULT nextval('cust_type_id_serial'),

cust_type_id int, -- cust_type_id 1 = internal 2 = EXTERNAL 3 = prospect/leads

cust_type_name varchar(15),

cust_type_date timestamp DEFAULT now()

)

-----------------------------------

-- customer

-----------------------------------

drop table if exists customer;

CREATE TABLE public.customer (

cust_id int4 NOT NULL DEFAULT nextval('cust_id_serial'::regclass),

cust_name varchar(30) NULL,

tid int, --FOREIGN KEY TO cust_type.tid

CONSTRAINT customer_pkey PRIMARY KEY (cust_id)

);

ALTER TABLE customer ADD CONSTRAINT cust_tid_fkey FOREIGN KEY (tid)

REFERENCES public."cust_type"(tid);

drop sequence if exists cust_attr_id_serial;

create sequence cust_attr_id_serial;

drop table if exists cust_attr CASCADE;

-----------------------------------

-- cust_attr

-----------------------------------

CREATE TABLE public.cust_attr(

attr_id int4 NOT NULL DEFAULT nextval('cust_attr_id_serial'::regclass),

cust_id int4 NULL,

attr_value varchar(30) NULL,

CONSTRAINT cust_attr_pkey PRIMARY KEY (attr_id)

);

-- public.cust_attr foreign keys

ALTER TABLE public.cust_attr ADD CONSTRAINT cust_attr_attr_id_fkey FOREIGN KEY (attr_id)

REFERENCES public."attribute"(attr_id);

ALTER TABLE public.cust_attr ADD CONSTRAINT cust_attr_cust_id_fkey FOREIGN KEY (cust_id)

REFERENCES public.customer(cust_id);

-----------------------------------

-- conact

-----------------------------------

DROP TABLE IF EXISTS public.contact;

DROP SEQUENCE IF EXISTS public.contact_id_serial;

CREATE SEQUENCE public.contact_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

CREATE TABLE public.contact(

contact_id int4 NOT NULL DEFAULT nextval('contact_id_serial'::regclass),

cust_id int4 NULL,

contact_name varchar(30) NULL,

CONSTRAINT contact_pkey PRIMARY KEY (contact_id)

);

-----------------------------------

-- conatct_attr

-----------------------------------

drop table if exists contact_attr;

drop sequence if exists contact_attr_id_serial;

create sequence contact_attr_id_serial;

create table contact_attr(

contact_attr_id int primary key default nextval('contact_attr_id_serial'),

contact_id int4 not null,

attr_id int references "attribute" (attr_id),

attr_value varchar(30)

);

ALTER TABLE public.contact_attr ADD CONSTRAINT contact_attr_fkey

FOREIGN KEY (contact_id) REFERENCES public.contact(contact_id);

-------------------

-- category tables

-------------------

DROP table if exists public.category ;

CREATE TABLE public.category (

categoryid int4 NOT NULL,

"name" varchar(20) NULL,

CONSTRAINT catid_pkey PRIMARY KEY (categoryid)

);

-------------------

-- product tables

-------------------

drop TABLE IF EXISTS products cascade;

drop sequence if exists prod_id_serial;

create sequence prod_id_serial;

CREATE TABLE public.products(

prod_id int4 NOT NULL DEFAULT nextval('prod_id_serial'::regclass),

prod_name varchar(30) NULL,

prod_description varchar(100) NULL,

prod_note varchar(100) NULL,

prod_image varchar(50) NULL,

prod_in_stock int4 NULL,

prod_categoryid int4 NULL,

prod_price numeric(10, 2) NULL,

CONSTRAINT product_pkey PRIMARY KEY (prod_id)

);

-- public.products foreign keys

ALTER TABLE public.products ADD CONSTRAINT categorid_fkey

FOREIGN KEY (prod_categoryid) REFERENCES public.category(categoryid);

---------------------------------

-- prod_attr (product attribute)

---------------------------------

drop table if exists public.prod_attr;

drop sequence if exists prod_attr_id_serial;

create sequence prod_attr_id_serial;

CREATE TABLE public.prod_attr (

prod_attr_id int4 primary key DEFAULT nextval('prod_attr_id_serial'),

attr_id int4 ,

prod_id int4 references products(prod_id),

attr_name varchar(30) NULL,

attr_value varchar(50) NULL

);

------------------

-- orders table

------------------

CREATE SEQUENCE public.order_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

-- public.orders definition

-- Drop table

-- DROP TABLE public.orders;

CREATE TABLE public.orders (

order_id int4 NOT NULL DEFAULT nextval('order_id_serial'::regclass),

cust_id int4 NULL,

order_status int4 NOT NULL DEFAULT 1,

order_date date NULL DEFAULT now(),

order_desc varchar(500) NULL,

CONSTRAINT orders_pkey PRIMARY KEY (order_id)

);

-- order_status 1=open, 2=assigned, 3=pending, 4=closed 5=deleted

-- public.orders foreign keys

ALTER TABLE public.orders ADD CONSTRAINT orders_cust_id_fkey FOREIGN KEY (cust_id)

REFERENCES public.customer(cust_id);

CREATE SEQUENCE public.order_detail_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

-- public.order_detail definition

-- Drop table

-- DROP TABLE public.order_detail;

----------------------

-- order_detail table

----------------------

CREATE TABLE public.order_detail(

order_detail_id int4 NOT NULL DEFAULT nextval('order_detail_id_serial'::regclass),

order_id int4 NULL,

prod_id int4 NULL,

order_detail_date timestamp NULL DEFAULT now(),

order_detail_desc varchar(50) NULL

);

-- public.order_detail foreign keys

ALTER TABLE public.order_detail ADD CONSTRAINT order_detail_order_id_fkey

FOREIGN KEY (order_id) REFERENCES public.orders(order_id);

ALTER TABLE public.order_detail ADD CONSTRAINT order_detail_prod_id_fkey

FOREIGN KEY (prod_id) REFERENCES public.products(prod_id);

-------------------

-- activity table

-------------------

-- public.activity definition

-- Drop table

-- DROP TABLE public.activity;

CREATE TABLE public.activity (

act_id int4 NOT NULL DEFAULT nextval('activity_id_serial'::regclass),

order_id int4 NULL,

contact_id int4 NULL,

act_type varchar(10) NULL,

act_date timestamp NULL DEFAULT now(),

act_desc varchar(1000) NULL,

CONSTRAINT activity_act_type_check CHECK (((act_type)::text = ANY

((ARRAY['pc'::character varying, 'im'::character varying, 'em'::character varying,

'pm'::character varying])::text[]))),

CONSTRAINT activity_pkey PRIMARY KEY (act_id)

);

--pc = phonecall im=messaging em=email pm(physical meeting)

-- public.activity foreign keys

ALTER TABLE public.activity ADD CONSTRAINT activity_contact_id_fkey

FOREIGN KEY (contact_id) REFERENCES public.contact(contact_id);

ALTER TABLE public.activity ADD CONSTRAINT activity_order_id_fkey

FOREIGN KEY (order_id) REFERENCES public.orders(order_id);

------------------

-- service table

------------------

drop sequence if exists service_id_serial;

create sequence service_id_serial;

alter sequence service_id_serial restart with 101;

drop table if exists service;

CREATE TABLE service(

service_id int4 primary key default nextval('service_id_serial'),

order_id int4 references orders(order_id),

prod_id int4 references products(prod_id),

service_desc varchar(500) null,

service_tech varchar(30),

status int DEFAULT 1

);

--service.status: 1 open 2 close 3 pending

----------------------------------

-- service_attr(service attribute)

----------------------------------

drop table if exists service_attr;

drop sequence if exists service_attr_id_serial;

create sequence service_attr_id_serial;

alter sequence service_attr_id_serial restart with 101;

-- public.service_attr definition

-- Drop table

DROP TABLE public.service_attr;

CREATE TABLE public.service_attr (

service_attr_id int4 NOT NULL DEFAULT nextval('service_attr_id_serial'::regclass),

service_id int4 NULL,

cust_attr_id int4 NULL,

order_id varchar(15) NULL,

service_type varchar(4) NULL,

converse_date timestamptz NULL DEFAULT now(),

converse_tech varchar(30) NULL,

conversation varchar(500) NULL,

CONSTRAINT service_attr_pkey PRIMARY KEY (service_attr_id),

CONSTRAINT service_attr_service_type_check CHECK (((service_type)::text = ANY

((ARRAY['sfwr'::character varying, 'hdwr'::character varying])::text[]))));

);

-- public.service_attr foreign keys

ALTER TABLE public.service_attr ADD CONSTRAINT service_attr_cust_attr_id_fkey

FOREIGN KEY (cust_attr_id) REFERENCES public.cust_attr(cust_attr_id);

ALTER TABLE public.service_attr ADD CONSTRAINT service_attr_service_id_fkey

FOREIGN KEY (service_id) REFERENCES public.service(service_id);

CREATE SEQUENCE public.user_id_serial

INCREMENT BY 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1;

CREATE TABLE public.users (

userid int4 NOT NULL DEFAULT nextval('user_id_serial'::regclass),

pswd varchar(20) NULL,

email varchar(40) NULL,

firstname varchar(40) NULL,

lastname varchar(40) NULL,

address1 varchar(40) NULL,

address2 varchar(40) NULL,

zipcode varchar(10) NULL,

city varchar(40) NULL,

state varchar(40) NULL,

country varchar(40) NULL,

phone varchar(16) NULL,

CONSTRAINT users_pkey PRIMARY KEY (userid)

);

CREATE OR REPLACE FUNCTION public.add_customer(name text, custTypeId integer)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

customer_row customer%ROWTYPE;

BEGIN

insert into customer (cust_name,tid) values(name,custTypeId);

RETURN 0 ;

END;

$function$

-- customer delete function

CREATE OR REPLACE FUNCTION public.del_customer(name text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

declare

custid integer;

customer_row customer%ROWTYPE;

BEGIN

DELETE FROM customer WHERE cust_name = name;

RETURN 0 ;

END;

$function$

-- contact insert function

CREATE OR REPLACE FUNCTION public.add_contact(

custid_var integer, contactname text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

contact_row contact%ROWTYPE;

BEGIN

insert into contact (cust_id, contact_name) values(custid_var,contactname);

RETURN 0 ;

END;

$function$

-- contact delete function

CREATE OR REPLACE FUNCTION public.del_contact(contactid integer)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

contact_row contact%ROWTYPE;

BEGIN

DELETE FROM contact WHERE contact_id = contactid;

RETURN 0 ;

END;

$function$

-- contact_attr insert function

CREATE OR REPLACE FUNCTION public.add_contact_attr(

contact_id_var integer, attr_id_var integer, attr_value_var text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

contact_attr_row contact_attr%ROWTYPE;

BEGIN

insert into contact_attr(contact_id, attr_id, attr_value) values

(contact_id_var, attr_id_var, attr_value_var);

RETURN 0 ;

END;

$function$

CREATE OR REPLACE FUNCTION public.add_order(custId integer, orderDesc text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

contact_row orders%ROWTYPE;

BEGIN

insert into orders (cust_id,order_desc) values(custId,orderDesc);

RETURN 0 ;

END;

$function$

CREATE OR REPLACE FUNCTION public.add_order_detail(

orderId integer, prodId integer, orderDetailDesc text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

order_detail order_detail%ROWTYPE;

BEGIN

insert into order_detail (order_id,prod_id,order_detail_desc)

values(orderId,prodId,orderDetailDesc);

RETURN 0 ;

END;

$function$

CREATE OR REPLACE FUNCTION public.add_product(

prodName text, prodDesc text,prodNote text,prodImage text,

prodInStock integer,prodCategory integer, prodPrice NUMERIC(10,2))

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

product_row products%ROWTYPE;

BEGIN

insert into products (prod_name,prod_description,prod_note,prod_image,

prod_in_stock,prod_categoryid, prod_price) values(

prodName, prodDesc,prodNote,prodImage,prodInStock,prodCategory, prodPrice);

RETURN 0 ;

END;

$function$

CREATE OR REPLACE FUNCTION public.add_product_attr(

attrid integer, prodId integer, attrName text, attrValue text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

prod_attr prod_attr%ROWTYPE;

BEGIN

insert into prod_attr(attr_id,prod_id,attr_name,attr_value)

values(attrid,prodId,attrName,attrValue);

RETURN 0 ;

END;

$function$

CREATE OR REPLACE FUNCTION public.add_service(

orderId integer, prodId integer, serviceDesc text, serviceTech text,

serviceStatus integer)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

service_row service%ROWTYPE;

BEGIN

insert into service (order_id,prod_id,service_desc,service_tech,status)

values(orderId, prodId,serviceDesc,serviceTech,serviceStatus);

RETURN 0 ;

END;

$function$

--------------------

--function usage-

--------------------

--customer table

select add_customer('casperbbq');

select del_customer('casperbbq');

--cust_attr table

--cust_id_var integer, attrid integer, attribute_value_var

-- 1=Hyperscale IO 3=office phone 408-781-6849

SELECT add_cust_attr(1,3,'408-781-6849')

--contact table

select add_contact(2,'Casper Chang') -- cust_id=2

select del_contact(7) -- contact_id = 7

--order table

SELECT add_order(2,'Casper''s first order')

--order_detail table

select add_order_detail(1,101,'testing testing testing...')

--end function usage

MADlib - (M)achine learning (A)nd (D)eep learning package

Install MADlib

Download MADlib RPMCopy apache-madlib-1.20.0-Centos7.rpm to all nodes

[root@mdw ~]# forcp /tmp/apache-madlib-1.20.0-CentOS7.rpm /tmp

install madlib rpm to all nodes

# master node

[root@mdw ~]# fornodes -m ' yum install -y /tmp/apache-madlib-1.20.0-CentOS7.rpm'

# worker nodes

[root@mdw ~]# fornodes -n ' yum install -y /tmp/apache-madlib-1.20.0-CentOS7.rpm'

# install madpack to ecom database

[root@mdw ~]# /usr/local/madlib/Versions/1.20.0/bin/madpack -p greenplum -c gpadmin@mdw:5432/ecom install

Naive Bayes Classification

Naive Bayes analysis predicts the likelihood of an outcome of a class variable, or category,based on one or more independent variables, or attributes. The class variable is a non-numeric

categorial variable, a variable that can have one of a limited number of values or categories.

The class variable is represented with integers, each integer representing a category.

For example, if the category can be one of “true”, “false”, or “unknown,” the values can be

represented with the integers 1, 2, or 3.

The attributes can be of numeric types and non-numeric, categorical, types. The training function

has two signatures – one for the case where all attributes are numeric and another for mixed numeric

and categorical types. Additional arguments for the latter identify the attributes that should be

handled as numeric values. The attributes are submitted to the training function in an array.

Creating External Tables

test

[root@mdw ext]# pwd

/opt/greenplum/ext

[root@mdw ext]# chmod 664 /var/log/messages

[gpadmin@mdw ext]# ln -sf /var/log/messages messages

[gpadmin@mdw ~]$ nohup gpfdist -p 8801 -d /opt/greenplum/ext -l /home/gpadmin/gpfdist.log &

Create External Table

# var.log.messages

drop external table if exists var_log_messages;

CREATE READABLE EXTERNAL table var_log_messages

(

msg text

)

location ('gpfdist://mdw:8801/messages')

format 'TEXT' ;

Test select from External Table

[gpadmin@mdw scripts]$ psql -d ecom

hyperscaleio=# select msg from var_log_messages where msg like '%mdw%'

Script for above select is located in /opt/greenplum/scripts

[gpadmin@mdw scripts]$ pwd

/opt/greenplum/scripts

[root@mdw scripts]# cat view_logs

#!/bin/bash

usage()

{

cat << EOF

usage: bash ./ -n host_name

-m master

-w worker

-a all

-h Brings up this menu

EOF

}

case $1 in

-m)

shift

psql -d ecom -f select_var_log_messages.mdw.sql

;;

-w)

shift

psql -d ecom -f select_var_log_messages.hdw.sql

;;

-a)

shift

psql -d ecom -f select_var_log_messages.all.sql

;;

*) usage

exit 1

esac

[gpadmin@mdw scripts]$ chmod 775 view_logs

[gpadmin@mdw scripts]$ cat select_var_log_messages.sql

select msg from var_log_messages;

Write "test" message to /var/log/message

[root@mdw log]# logger test

[gpadmin@mdw scripts]$ ./view_logs -m

Jul 25 03:32:01 mdw rsyslogd: [origin software="rsyslogd" swVersion="8.24.0-57.el7_9.1"

Jul 25 03:40:01 mdw systemd: Started Session 647 of user root.

...

Jul 25 11:30:01 mdw systemd: Started Session 717 of user root.

Jul 25 11:33:25 hdm01 root: test

(172 rows)

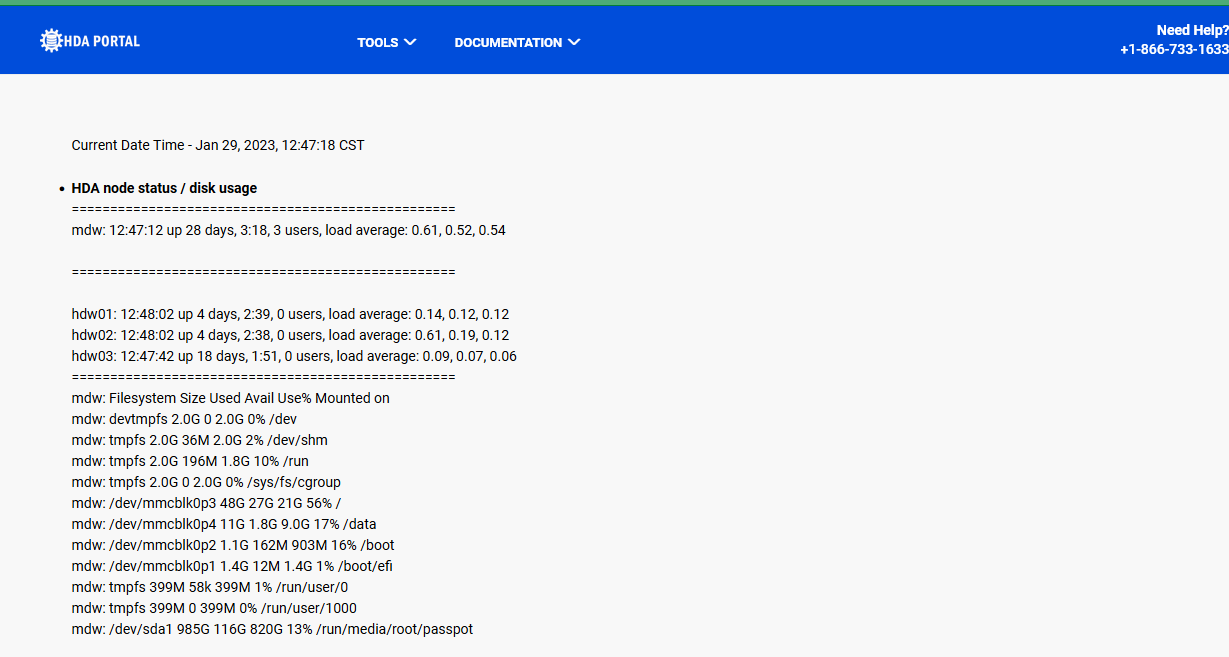

System Administration

Scripting Tips

Sort Process by Size descending orderps -elf |sort -k 9 -r |more

Explain

EXPLAIN displays the query plan that the Greenplum or Postgres Planner generates forthe supplied statement. Query plans are a tree plan of nodes. Each node in the plan represents a

single operation, such as table scan, join, aggregation or a sort.

[gpadmin@hdm01 ~]$ psql ecom

psql (9.4.24)

Type "help" for help.

shop=# explain select * from users

INFO: analyzing "public.categories"

INFO: Executing SQL: select pg_catalog.gp_acquire_sample_rows(41456, 10000, 'f');

INFO: ANALYZE estimated relpages=5 for index categories_pkey

INFO: analyzing "public.users"

INFO: Executing SQL: select pg_catalog.gp_acquire_sample_rows(40970, 10000, 'f');

INFO: ANALYZE estimated relpages=5 for index users_pkey

INFO: analyzing "public.products"

INFO: Executing SQL: select pg_catalog.gp_acquire_sample_rows(41477, 10000, 'f');

INFO: ANALYZE skipping index products_pkey since relation products has no rows.

INFO: analyzing "public.orders"

INFO: Executing SQL: select pg_catalog.gp_acquire_sample_rows(41662, 10000, 'f');

INFO: ANALYZE skipping index orders_pkey since relation orders has no rows.

Analyze

analyze collects statistics about the contents of tables in the database,and stores the results in the system catalog table pg_statistic. Greenplum database uses these

statistics to determine the best execution plan for the queries.

ANALYZE [VERBOSE] [ROOTPARTITION [ALL] ] [table [ (column [, ...] ) ]]

[gpadmin@mdw ~]$ psql ecom

hyperscaleio=# analyze verbose;

INFO: analyzing "pg_catalog.pg_authid"

INFO: "pg_authid": scanned 1 of 1 pages, containing 7 live rows and 0 dead rows; 7 rows in sample, 7 estimated total rows

INFO: ANALYZE estimated relpages=2 for index pg_authid_rolname_index

INFO: ANALYZE estimated relpages=2 for index pg_authid_oid_index

INFO: ANALYZE estimated relpages=2 for index pg_authid_rolresqueue_index

INFO: ANALYZE estimated relpages=2 for index pg_authid_rolresgroup_index

INFO: analyzing "pg_catalog.pg_user_mapping"

...

Vacuumdb

vacuumdb cleans and analyzes a PostgreSQL database.vacuumdb [OPTION]... [DBNAME]

Options:

-a, --all vacuum all databases

-d, --dbname=DBNAME database to vacuum

-e, --echo show the commands being sent to the server

-f, --full do full vacuuming

[gpadmin@mdw ~]$ vacuumdb ecom

Down Segment

Identify and fix the problem(typically an HW/OS related).Run gprecoverseg from master to recover the segment node.

---[gpadmin@mdw ~]$ gpstop -r

Perfrom full recovery.

---[gpadmin@mdw ~]$ gprecoverseg -F

Run gprecoverseg with the -r option to return the segments to their preferred roles.

---[gpadmin@mdw ~]$ gprecoverseg -r

After rebalancing, run gpstate -e to confirm all segments are in their preferred roles.

---[gpadmin@mdw ~]$ gpstate -e

...

20210802:12:33:35:003059 gpstate:mdw:gpadmin-[INFO]:-All segments are running normally

Check segment status with one of the following command:

---select preferred_role,mode,status,port,hostname,address,datadir from pg_catalog.gp_segment_configuration

---[gpadmin@hdm01 ~]$ gpstate -m

Summary

--- gpstate -s = Greenplum Array Configuration details

--- gpstate -m = Mirror Segments in the system and their status

--- gpstate -c = To see the primary to mirror segment mappings

--- gpstate -f = To see the status of the standby master mirror:

--- gpstate -e = show segments with mirror status issues

Reference

https://gpdb.docs.pivotal.io/vxrail/1-0/index.html

https://greenplum-kubernetes.docs.pivotal.io/2-3/index.html

https://greenplum-kubernetes.docs.pivotal.io/2-3/deploy-operator.html

Move Mirror Segment

[gpadmin@mdw ~]$ cat move_cmdw|50000|/data/mirror/gpseg2 mdw|50000|data2/mirror/gpseg

[gpadmin@mdw ~]$ gpmovemirrors --verbose --input move_c

Dump and Restore Data

#Database create script[gpadmin@mdw Downloads]$ pg_dump --create shop >shop.sql

#Database data

[gpadmin@mdw Downloads]$ pg_dump -a shop >shop.dat

[gpadmin@mdw Downloads]$ ls -l shop.dat

-rw-rw-r-- 1 gpadmin gpadmin 3549 Mar 18 20:10 shop.dat

Delete Greenplum Instance

[gpadmin@mdw gpconfigs]$ gpdeletesystemInstall and Configure pxeboot

Install and configure pxe-boot -

Install required packages

[root@mdw ~]# yum install -y dhcp tftp tftp-server syslinux vsftpd xinetd

Copy required network boot files in ‘/var/lib/tftpboot/’

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/pxelinux.0 /var/lib/tftpboot/

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/menu.c32 /var/lib/tftpboot

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/memdisk /var/lib/tftpboot

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/mboot.c32 /var/lib/tftpboot

[root@mdw xinetd.d]# cp -v /usr/share/syslinux/chain.c32 /var/lib/tftpboot

[root@mdw xinetd.d]# mkdir /var/lib/tftpboot/pxelinux.cfg

[root@mdw xinetd.d]# mkdir /var/lib/tftpboot/networkboot

Greenplum

Greenplum documentation

Install Greenplum Database

Download gpdb from https://github.com/greenplum-db/gpdb/releases/tag/6.21.3

[root@mdw greenplum]# yum install -y \

/tmp/greenplum-db-6.21.3-rhel7-x86_64.rpm

[root@mdw greenplum]# forcp /tmp/greenplum-db-6.21.3-rhel7-x86_64.rpm /tmp

[root@mdw greenplum]# fornodes -n 'yum install -y greenplum-db-6-21.3-rhel7.x86_64.rpm'

Add the following to gpadmin's .bashrc

------------------------------------------------------------

source /opt/greenplum/greenplum-cc/gpcc_path.sh

export LD_PRELOAD=/lib64/libz.so.1 ps

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/data/master/gpseg-1

source /usr/local/greenplum-cc/gpcc_path.sh

------------------------------------------------------------

[gpadmin@mdw ~] cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config \

/home/gpadmin/gpconfigs/gpinitsystem_config

# Modify the following parameters in gpinitsystem_config:

declare -a DATA_DIRECTORY=(/data/primary)

MACHINE_LIST_FILE=/home/gpadmin/gpconfigs/hostfile

[root@mdw ~]# mkdir /data/primary

[root@mdw ~]# mkdir /data/master

[root@mdw ~]# fornodes -n 'mkdir -p /data/primary'

[root@mdw ~]# fornodes -n 'chown -R gpadmin:gpadmin /data/'

[root@mdw ~]# chown -R gpadmin:gpadmin /data

[root@mdw ~]# su - gpadmin

[gpadmin@mdw~]# gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile

Enable mirroring

[gpadmin@mdw~]# gpaddmirrorsrestart Greenplum to make sure all connections are terminated

gpstop -ar

Enter mirror segment data directory location 1 of 1 >

/data/mirror

. . .

[INFO]:-Data synchronization will continue in the background.

Use gpstate -s to check the resynchronization progress.

Start Greenplum Command Center

Option 1:

[gpadmin@mdw ~]$ /bin/bash -c 'source /usr/local/greenplum-cc/gpcc_path.sh && \

/usr/local/greenplum-cc/bin/gpcc start'

Option 2:

[gpadmin@mdw ~]$ source /usr/local/greenplum-cc/gpcc_path.sh

[gpadmin@mdw ~]$ gpcc start

Starting the gpcc agents and webserver...

Agent successfully started on 3/3 hosts

View Greenplum Command Center at http://mdw:28080

[gpadmin@mdw ~]$ cat .pgpass

*:5432:gpperfmon:gpmon:xxxxxxxx

*:5432:gpperfmon:gpmon:xxxxxxxx

JDBC

Download greenplum.jar

https://network.pivotal.io/products/vmware-tanzu-greenplum#/ \

(PROGRESS_DATADIRECT_JDBC_DRIVER_PIVOTAL_GREENPLUM_6.0.0+181)

from Pivotal Network

ln -sf /usr/bin/python3 /usr/bin/python

Download jaydbeapi

https://github.com/baztian/jaydebeapi/archive/refs/heads/master.zip

unzip jaydebeapi and install with python setup.py install

DBeaver

Add gpadmin to /data/master/gpseg-1/pg_hba.conf

# TYPE DATABASE USER ADDRESS METHOD

host all gpadmin 192.168.152.188/32 trust

Download Dbeaver from https://dbeaver.io/files/dbeaver-ce-latest-x86_64-setup.exe

Hyperscale Business Model

b>customer

drop sequence if exists cust_id_serial;

create sequence cust_id_serial;

drop table if exists customer;

create table customer

(

cust_id int primary key default nextval('cust_id_serial'),

cust_name varchar(30)

);

insert into customer values(nextval('cust_id_serial'), 'Hyperscale IO');

select * from customer ;

| cust_id | cust_name |

|---|---|

| 3 | Hyperscale IO |

drop sequence if exists cust_id_serial;

create sequence cust_attr_id_serial;

drop table if exists cust_attr;

create table cust_attr

(

attr_id int primary key default nextval('cust_attr_id_serial'),

cust_id int references customer(cust_id),

attr_name varchar(30),

attr_value varchar(30)

);

insert into cust_attr values(nextval('cust_attr_id_serial') ,3, 'contact_name','Brady Chang');

insert into cust_attr values(nextval('cust_attr_id_serial') ,3, 'contact_name','Casper Chang');

select * from cust_attr ca ;

| attr_id | cust_id | attr_name | attr_value |

|---|---|---|---|

| 3 | 3 | contact_name | Brady Chang |

| 4 | 3 | contact_name | Casper Chang |

drop sequence if exists contact_attr_id_serial;

create sequence contact_attr_id_serial;

drop table if exists contact_attr;

create table contact_attr

(

contact_attr_id int primary key default nextval('contact_attr_id_serial'),

attr_id int references cust_attr(attr_id),

attr_name varchar(30),

attr_value varchar(30)

);

insert into contact_attr values(nextval('contact_attr_id_serial'),3,'cell','785-763-1300');

insert into contact_attr values(nextval('contact_attr_id_serial'),4,'cell','408-781-6849');

select * from contact_attr;

| contact_attr_id | attr_id | attr_name | attr_value |

|---|---|---|---|

| 1 | 3 | cell | 785-764-1300 |

| 1 | 4 | cell | 408-781-6849 |

Customer insert

CREATE OR REPLACE FUNCTION public.add_customer(name text)

RETURNS integer

LANGUAGE plpgsql

AS $function$

DECLARE

customer_row customer%ROWTYPE;

BEGIN

insert into customer (cust_name) values(name);

RETURN 0 ;

END;

$function$

To add cusstomer ---

select add_customer('casper101')

Customer select

select c.cust_name, ca.attr_name, ca.attr_value, cona.attr_name, cona.attr_value

from customer c, cust_attr ca , contact_attr cona

where c.cust_id =ca.cust_id and cona.attr_id=ca.attr_id ;

| cust_name | attr_name | attr_value | attr_name | attr_value |

|---|---|---|---|---|

| Hyperscale IO | contact_name | Brady Chang | cell | 785-764-1300 |

| Hyperscale IO | contact_name | Casper Chang | cell | 408-781-6849 |

# Product

drop sequence if exists prod_id_serial;

create sequence prod_id_serial;

CREATE TABLE public.product (

prod_id int4 NOT NULL DEFAULT nextval('prod_id_serial'),

prod_name varchar(30) NULL,

CONSTRAINT product_pkey PRIMARY KEY (prod_id)

);

select * from product

drop sequence if exists prod_attr_id_serial;

create sequence prod_attr_id_serial;

CREATE TABLE public.prod_attr (

attr_id int4 NOT NULL DEFAULT nextval('prod_attr_id_serial'),

prod_id int4 references product(prod_id),

attr_name varchar(30) NULL,

attr_value varchar(50) NULL,

CONSTRAINT prod_attr_pkey PRIMARY KEY (attr_id)

);

Product select

select p.prod_id , p.prod_name , pa.attr_name , pa.attr_value

from product p, prod_attr pa where p.prod_id =pa.prod_id;

| prod_id | prod_name | attr_name | attr_value |

|---|---|---|---|

| 1 | Hyperscale Data Appliance | version | 1.00 |

| 1 | Hyperscale Data Appliance | analytic engine | spark-3.0.2-bin-hadoop3.2 |

| 1 | Hyperscale Data Appliance | database | greenplum-db-6-6.18.2-1.el7.x86_64 |

| 1 | Hyperscale Data Appliance | operating system | CentOS 7.7.1908 |

Creating External Tables

Each csv file must resides in it's own directory

[root@hdm01 ext]# pwd

/opt/greenplum/ext

[root@hdm01 ext]# chmod 664 /var/log/messages

[gpadmin@hdm01 ext]# ln -sf /var/log/messages messages

[gpadmin@hdm01 ~]$ nohup gpfdist -p 8801 -d /opt/greenplum/ext -l /home/gpadmin/gpfdist.log &

Create External Table

# var.log.messages

drop external table if exists var_log_messages;

CREATE READABLE EXTERNAL table var_log_messages

(

msg text

)

location ('gpfdist://hdm01:8801/messages')

format 'TEXT' ;

Test select from External Table

[gpadmin@mdw scripts]$ psql -d ecom

hyperscaleio=# select msg from var_log_messages where msg like '%mdw%'

Script for above select is located in /opt/greenplum/scripts

[gpadmin@mdw scripts]$ pwd

/opt/greenplum/scripts

[root@mdw scripts]# cat view_logs

#!/bin/bash

usage()

{

cat << EOF

usage: bash ./ -n host_name

-m master

-w worker

-a all

-h Brings up this menu

EOF

}

case $1 in

-m)

shift

psql -d ecom -f select_var_log_messages.mdw.sql

;;

-w)

shift

psql -d ecom -f select_var_log_messages.hdw.sql

;;

-a)

shift

psql -d ecom -f select_var_log_messages.all.sql

;;

*) usage

exit 1

esac

[gpadmin@hdm01 scripts]$ chmod 775 view_logs

[gpadmin@hdm01 scripts]$ cat select_var_log_messages.sql

select msg from var_log_messages;

Write "test" message to /var/log/message

[root@hdm01 log]# logger test

[gpadmin@hdm01 scripts]$ ./view_logs -m

Jul 25 03:32:01 hdm01 rsyslogd: [origin software="rsyslogd" swVersion="8.24.0-57.el7_9.1"

Jul 25 03:40:01 hdm01 systemd: Started Session 647 of user root.

...

Jul 25 11:30:01 hdm01 systemd: Started Session 717 of user root.

Jul 25 11:33:25 hdm01 root: test

(172 rows)

System Administration

vacuumdb cleans and analyzes a PostgreSQL database.

[gpadmin@hdm01 ~]$ vacuumdb ecom

segment is down

Identify and fix the problem(typically an HW/OS related).

Run gprecoverseg from master to recover the segment node.

---[gpadmin@hdm01 ~]$ gpstop -r

Perfrom full recovery.

---[gpadmin@hdm01 ~]$ gprecoverseg -F

Run gprecoverseg with the -r option to return the segments to their preferred roles.

---[gpadmin@hdm01 ~]$ gprecoverseg -r

After rebalancing, run gpstate -e to confirm all segments are in their preferred roles.

---[gpadmin@hdm01 ~]$ gpstate -e

...

20210802:12:33:35:003059 gpstate:hdm01:gpadmin-[INFO]:-All segments are running normally

Check segment status with one of the following command:

---select preferred_role,mode,status,port,hostname,address,datadir from pg_catalog.gp_segment_configuration

---[gpadmin@hdm01 ~]$ gpstate -m

Summary

--- gpstate -s = Greenplum Array Configuration details

--- gpstate -m = Mirror Segments in the system and their status

--- gpstate -c = To see the primary to mirror segment mappings

--- gpstate -f = To see the status of the standby master mirror:

--- gpstate -e = show segments with mirror status issues

Python

Install Python 3.6.8 on mdw(CentOS 7.9.2004)

[root@mdw ~]# yum install -y epel-release

[root@mdw ~]# yum update

[root@mdw ~]# yum install python3

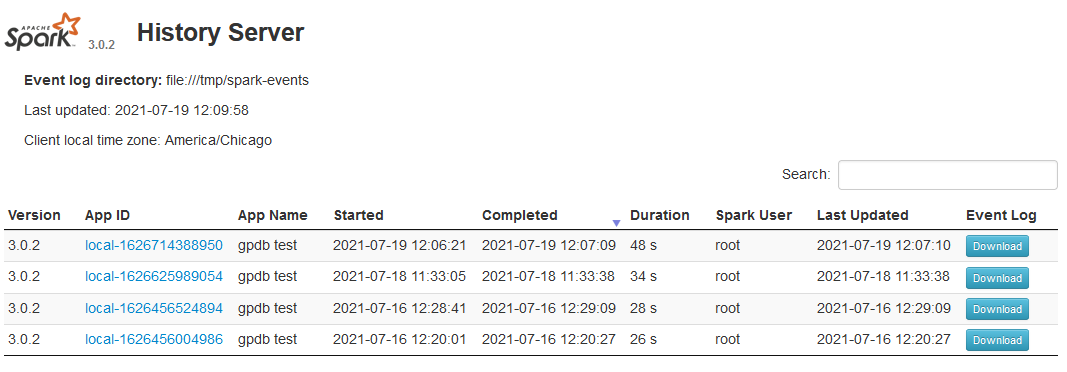

Apache Spark

Install Hadoop

yum install -y java-1.8.0-openjdk

fornodes -n 'yum install -y java-1.8.0-openjdk'

[root@mdw Downloads]# cd /root/Downloads

[root@mdw Downloads]# wget https://archive.apache.org/dist/hadoop/common/hadoop-3.2.2/hadoop-3.2.2.tar.gz

##### unpack to directory of your choice(/opt)

[root@mdw opt]# tar xvfz hadoop-3.2.2.tar.gz

##### set HADOOP_HOME to point to that directory.

[root@mdw opt]# echo 'export HADOOP_HOME=/opt/hadoop-3.2.2' >>/etc/profile

##### add $HADOOP_HOME/lib/native to LD_LIBRARY_PATH.

[root@mdw ~]# echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native' >>/etc/profile

Install Apache Spark

[root@mdw Downloads]# wget https://archive.apache.org/dist/spark/spark-3.0.2/spark-3.0.2-bin-hadoop3.2.tgz

[root@mdw Downloads]# cd /opt

[root@mdw opt]# tar xvfz spark-3.0.2-bin-hadoop3.2.tgz

[root@mdw opt]# ln -sf spark-3.0.2-bin-hadoop3.2 spark

Repeat the above steps for all hdw nodes

[root@mdw opt]# forcp -r /root/Downloads/spark-3.0.2-bin-hadoop3.2.tgz /root/Downloads

[root@mdw opt]# fornodes -n 'cd /opt && tar xvfz /root/Downloads/spark-3.0.2-bin-hadoop3.2.tgz'

[root@mdw opt]# fornodes -n 'cd /opt && ln -sf spark-3.0.2-bin-hadoop3.2 spark'

[root@mdw opt]# fornodes -n 'ls -l /opt'

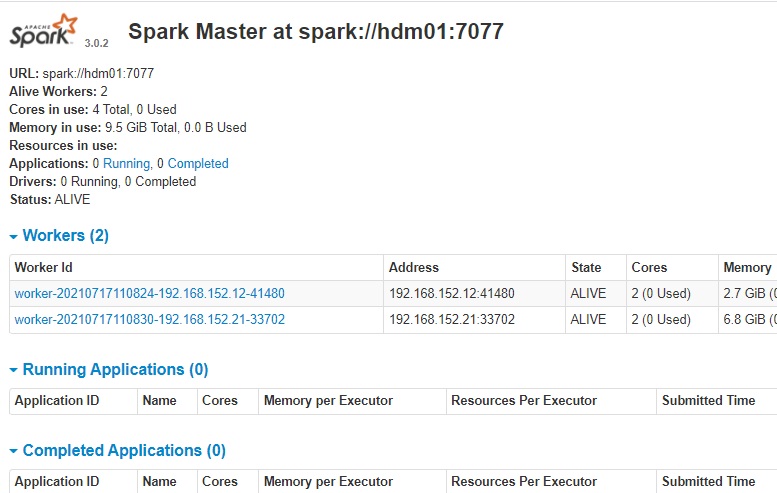

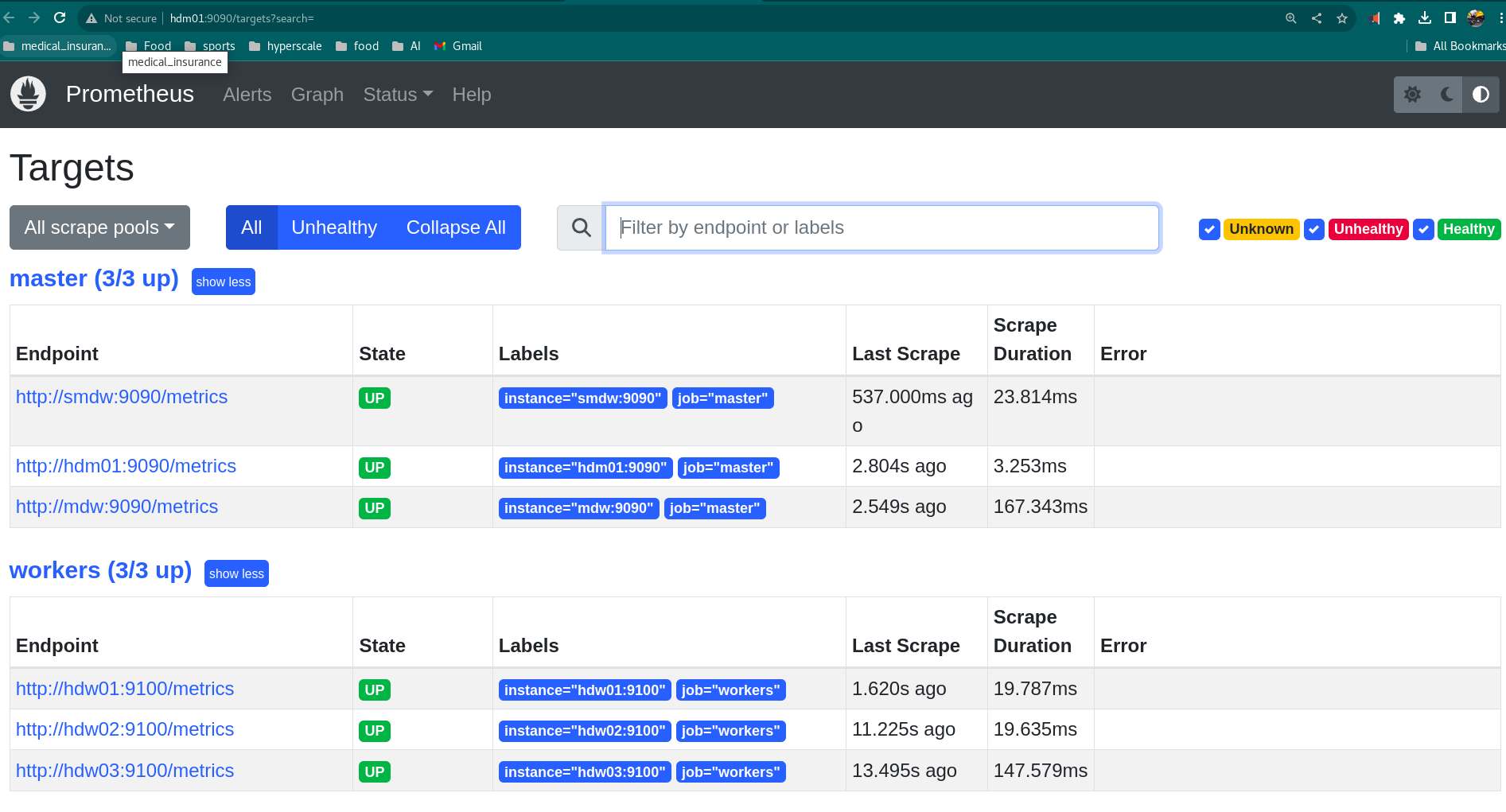

Start Apache Spark

Master Node (hdw02:8080)

[root@hdw02 opt]# cd /opt/spark

[root@hdw02 spark]# cat conf/spark-env.sh

#!/usr/bin/env bash

GPFDIST_PORT="12900, 12901, 12902"

[root@hdw02 spark]# sbin/start-master.sh

starting org.apache.spark.deploy.master.Master,